GitHub link: https://github.com/MSzgy/Evaluating-AI-Agents

Introduction

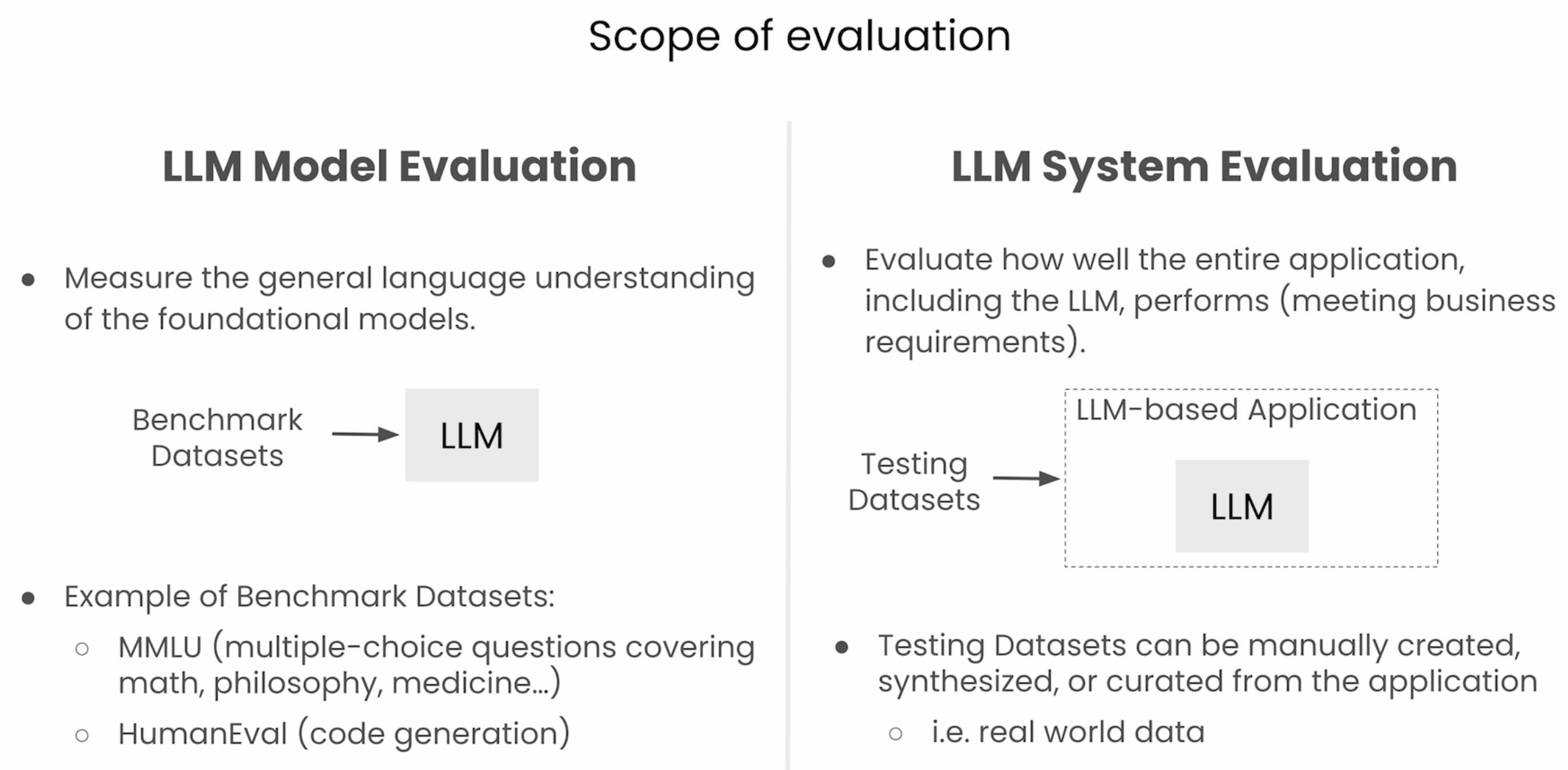

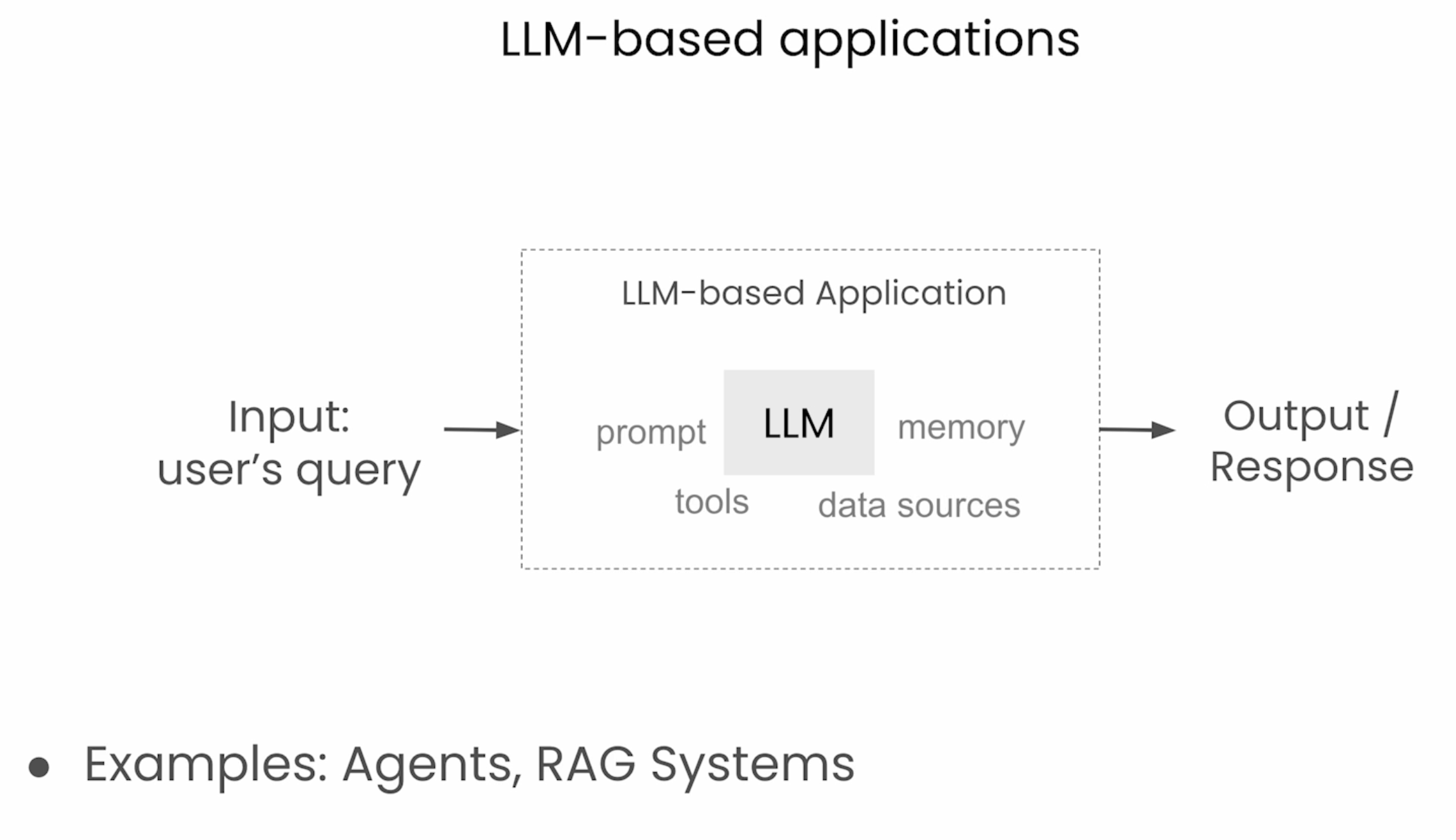

Evaluation in the time of LLMs

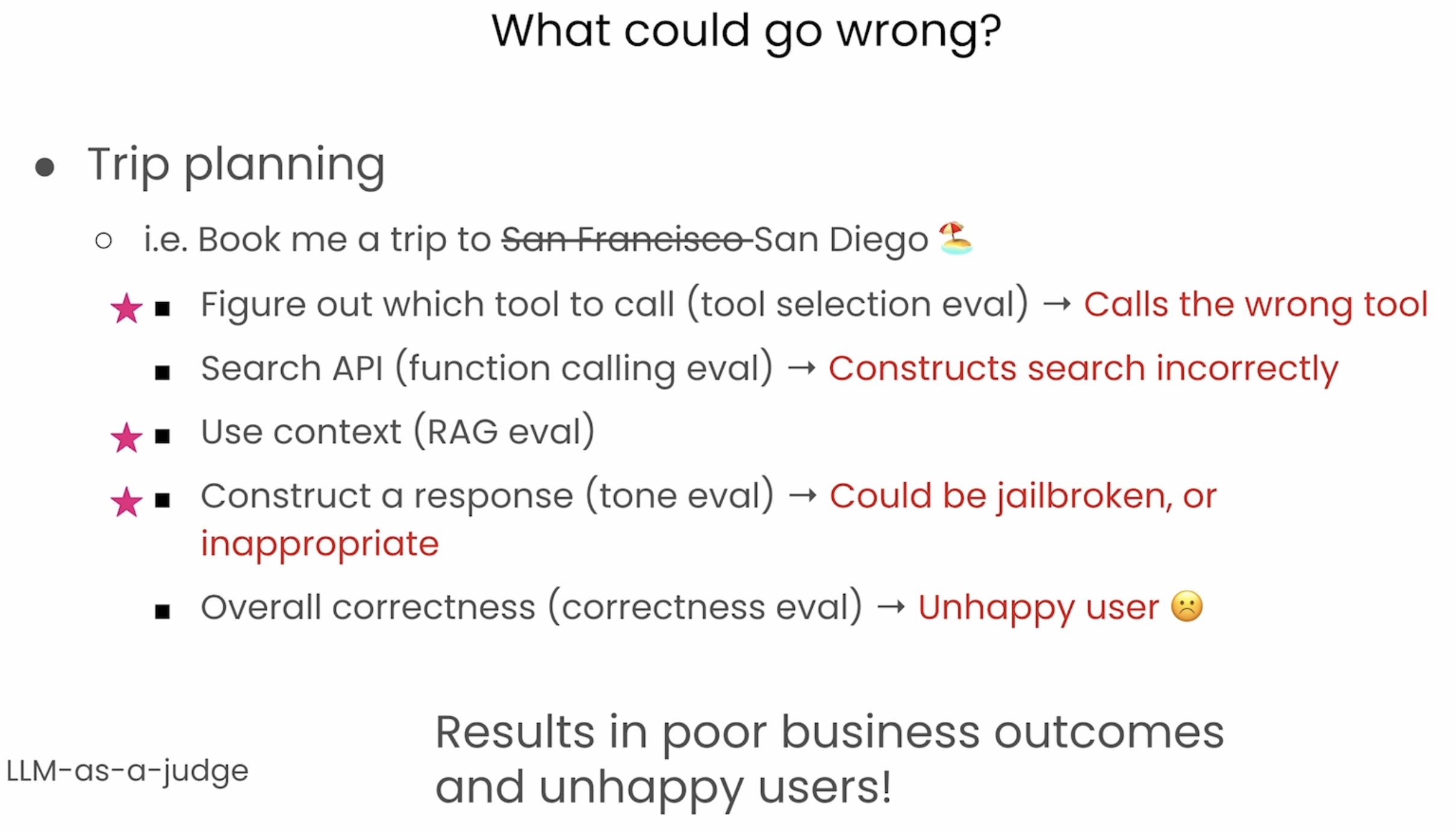

Agents can fail in ways such as the following:

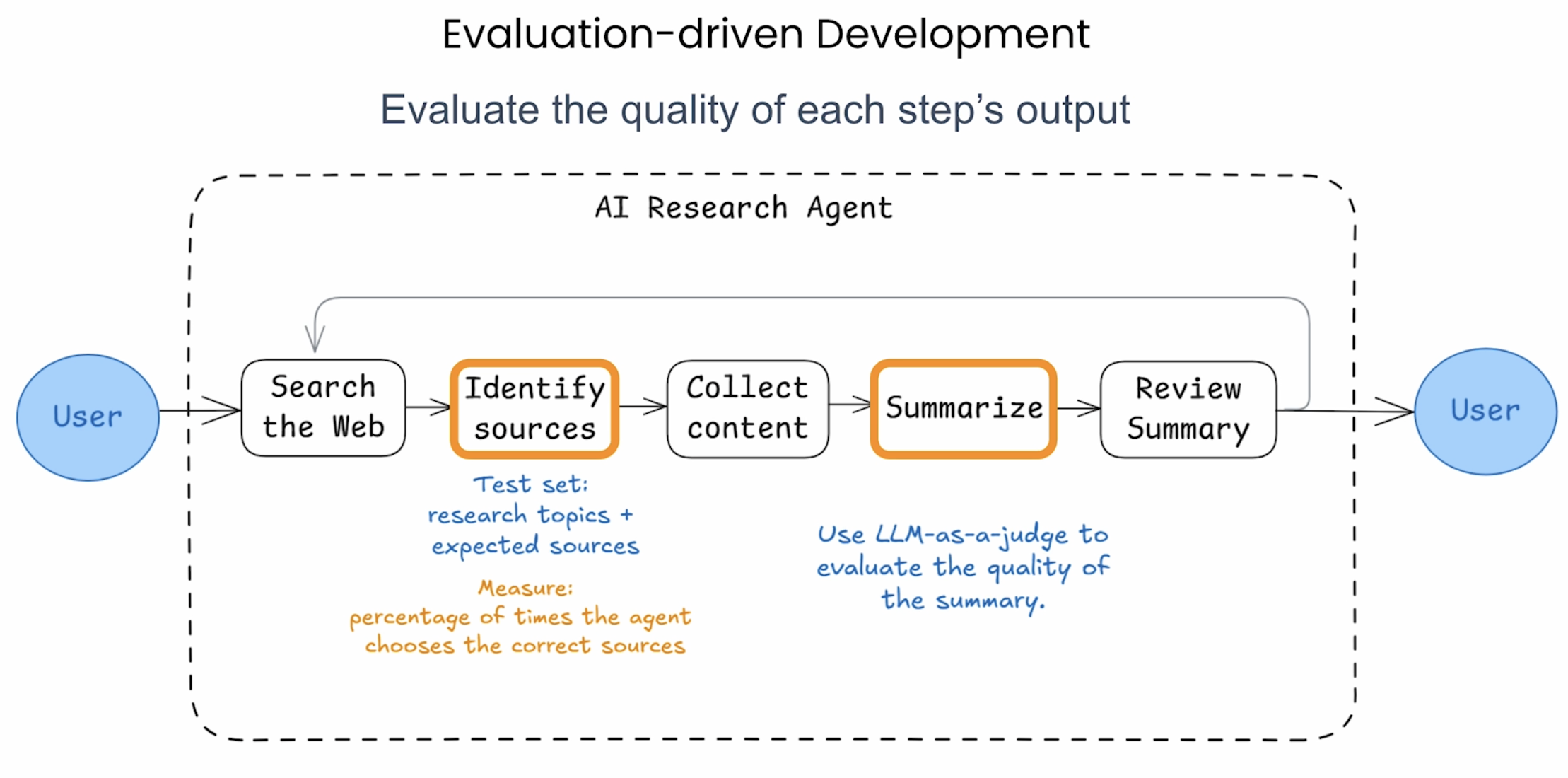

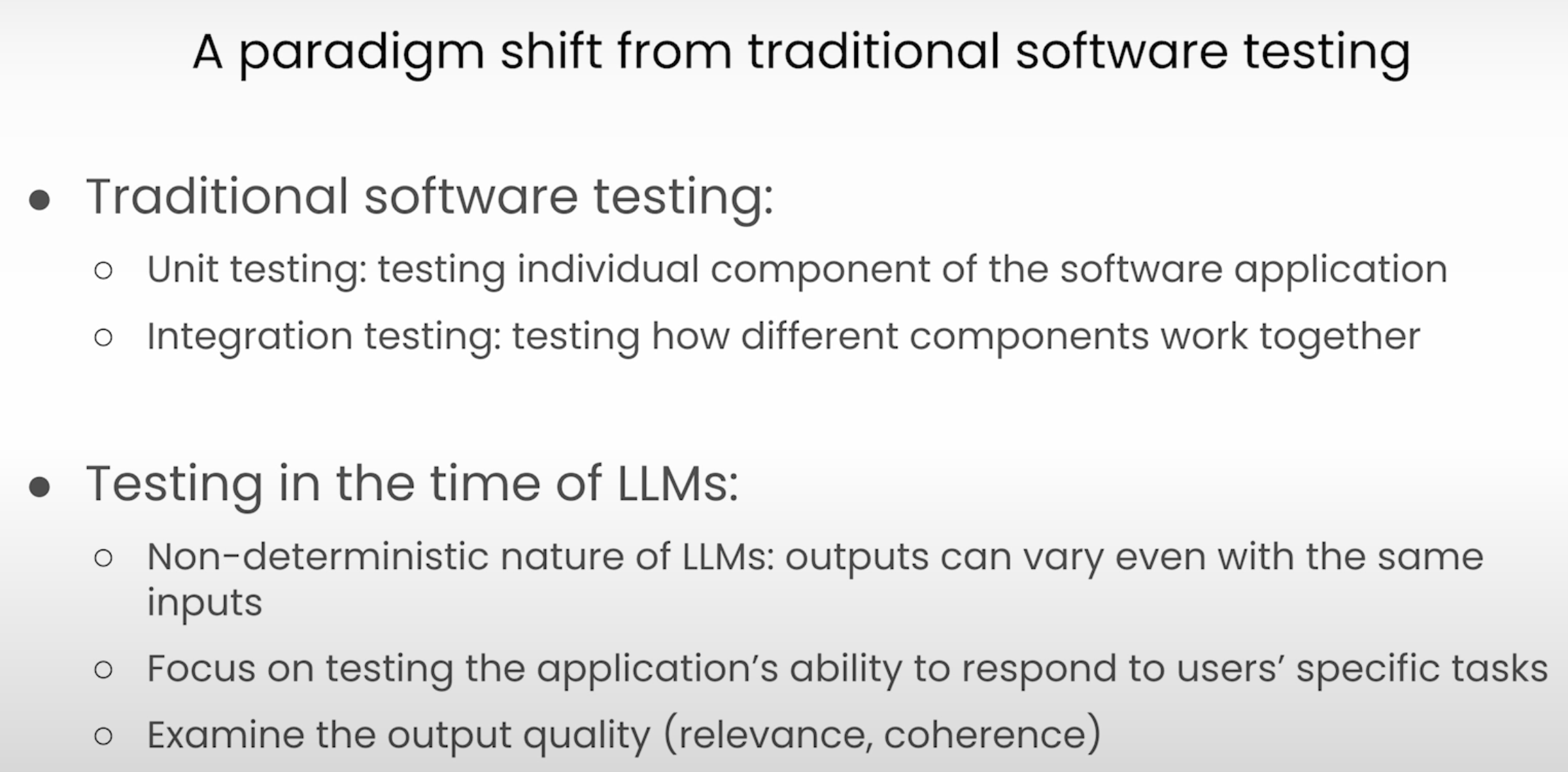

Just as traditional software needs unit tests and integration tests, an agent needs tests that evaluate its effectiveness and quality:

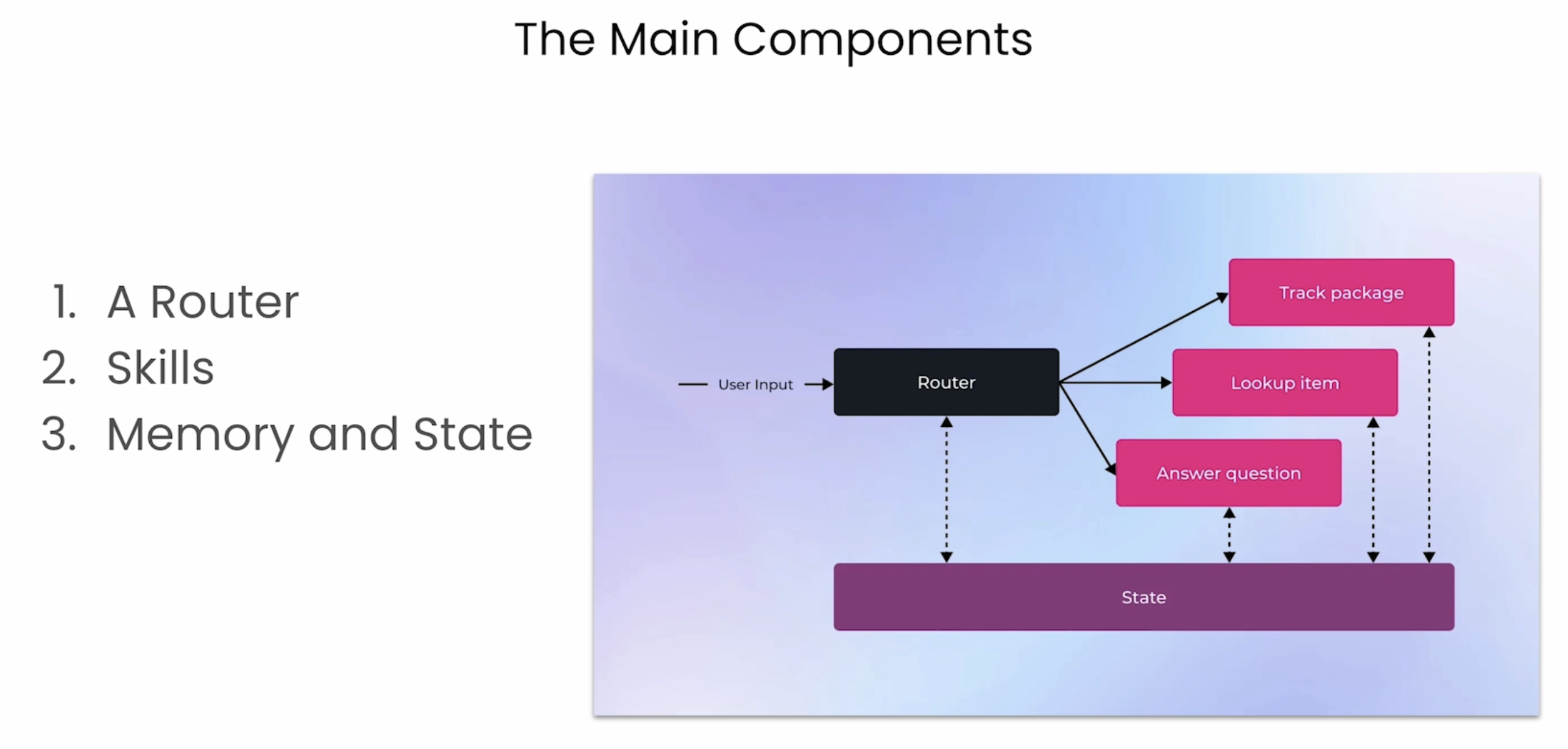

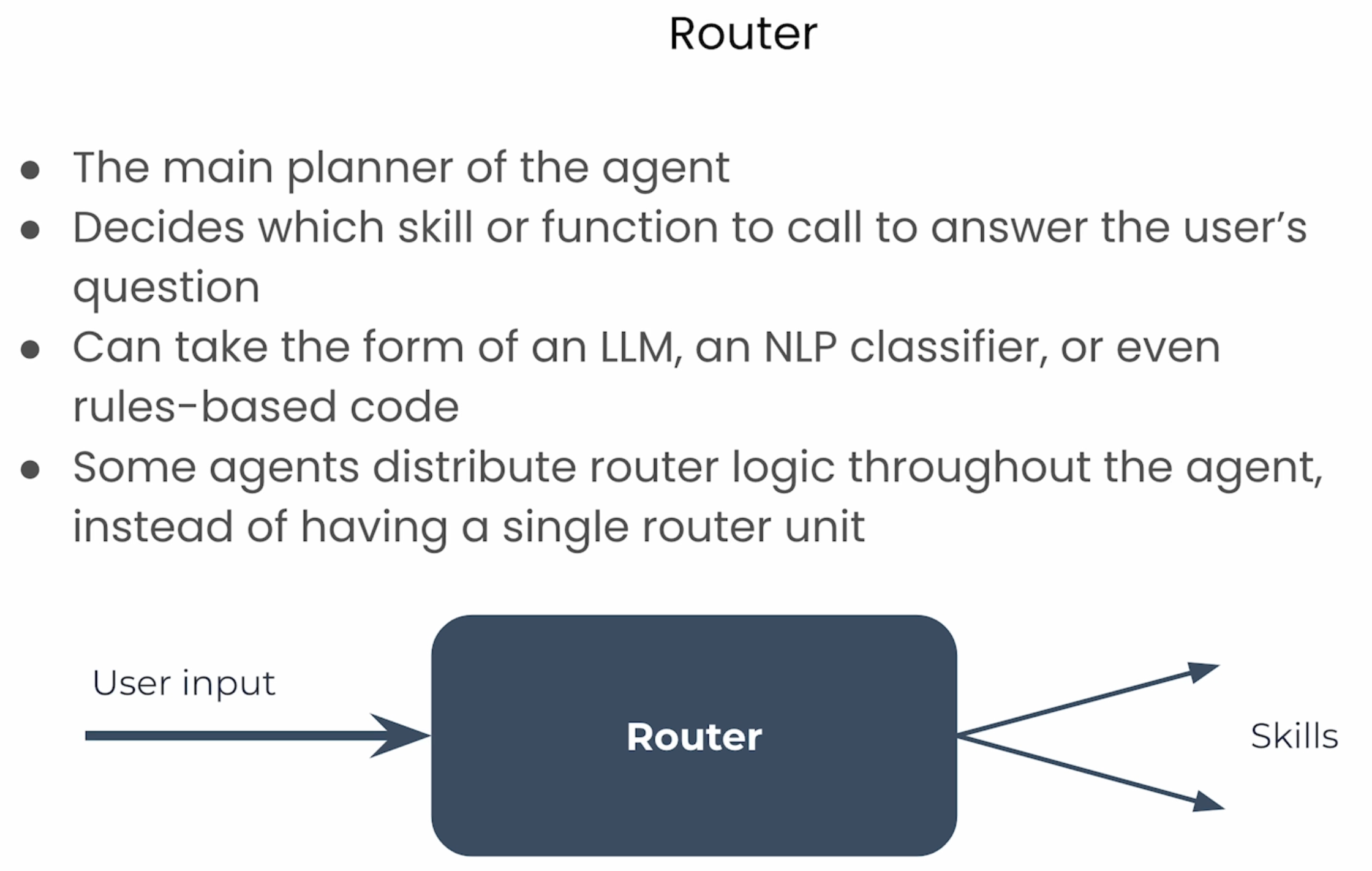

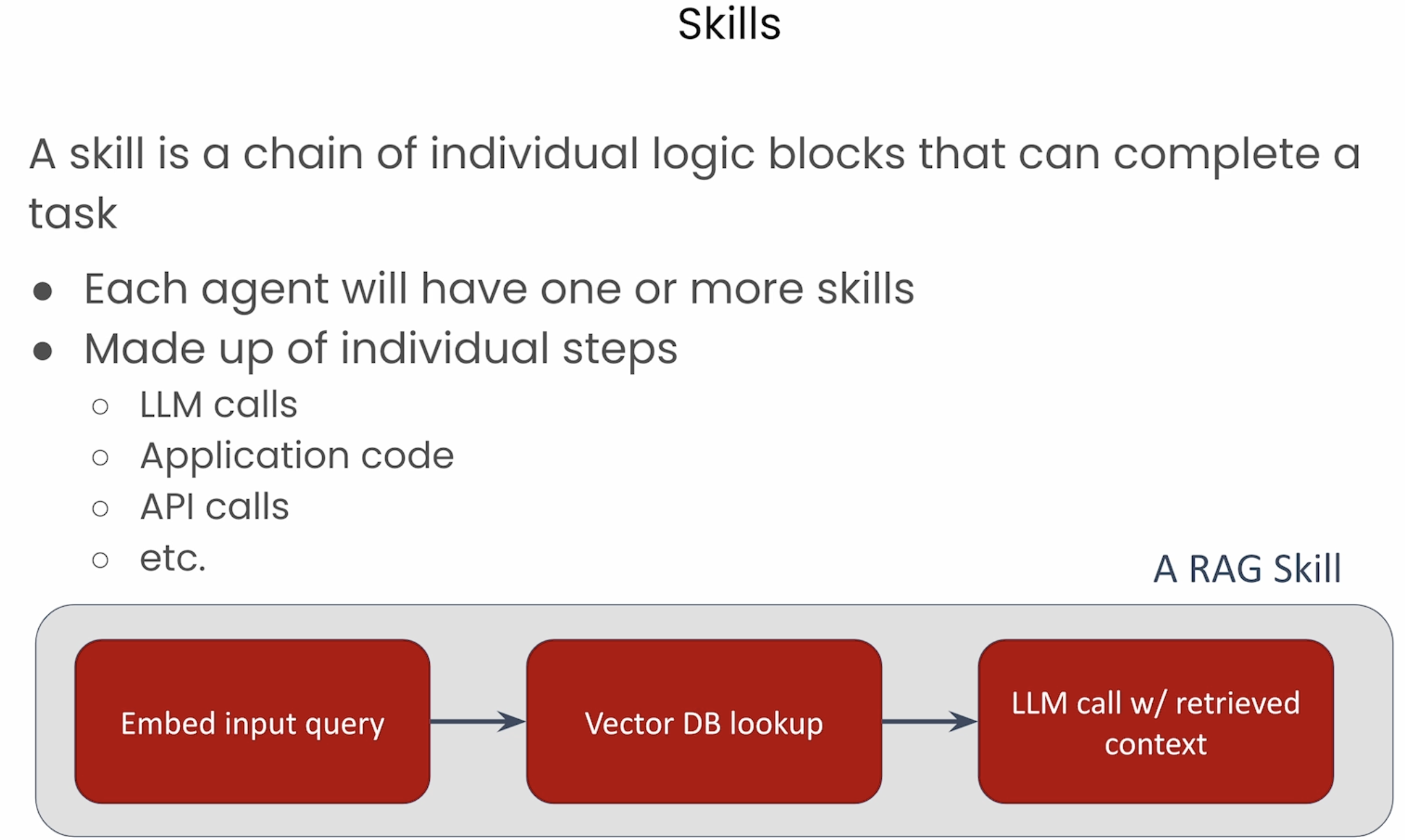

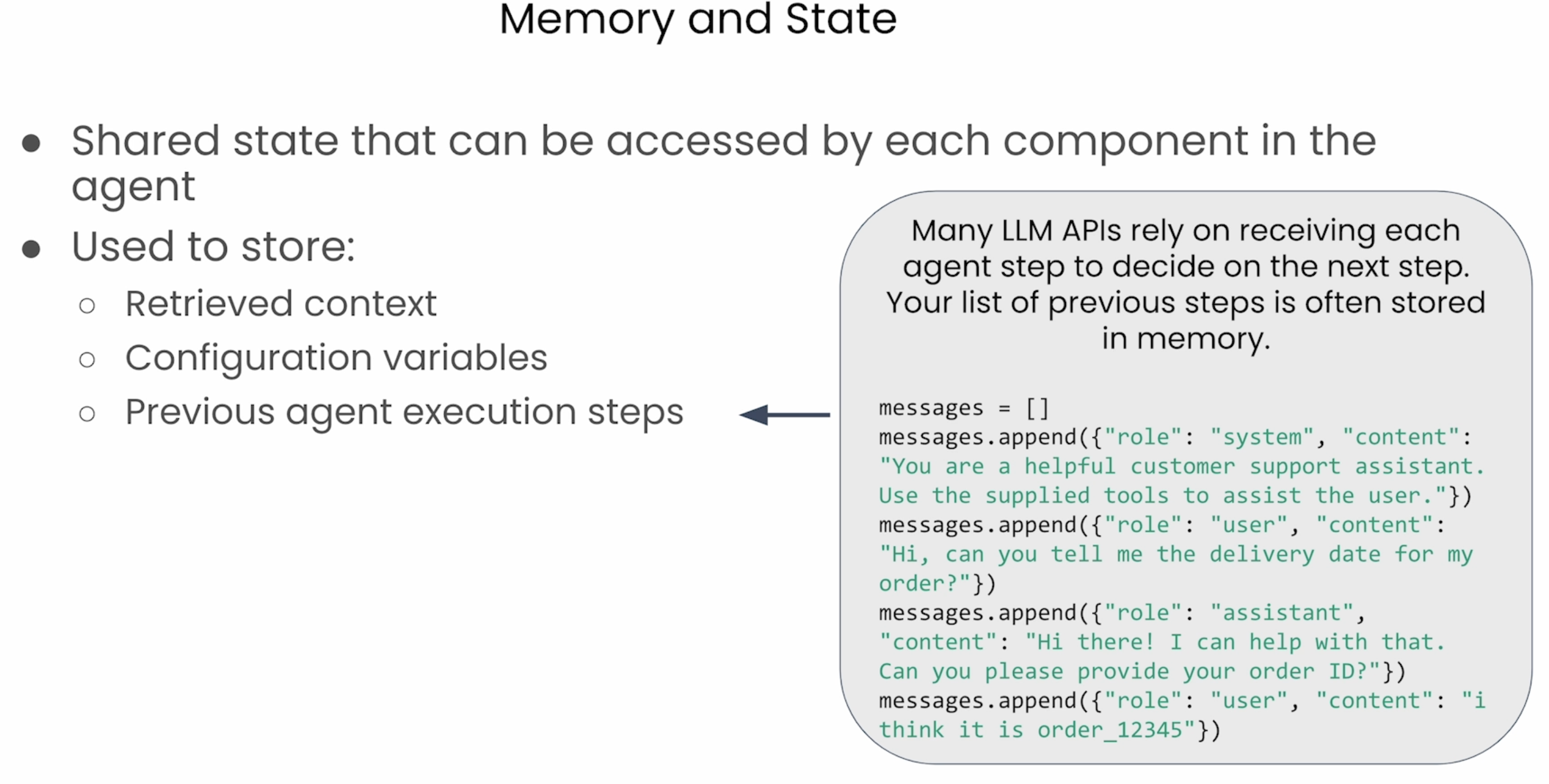

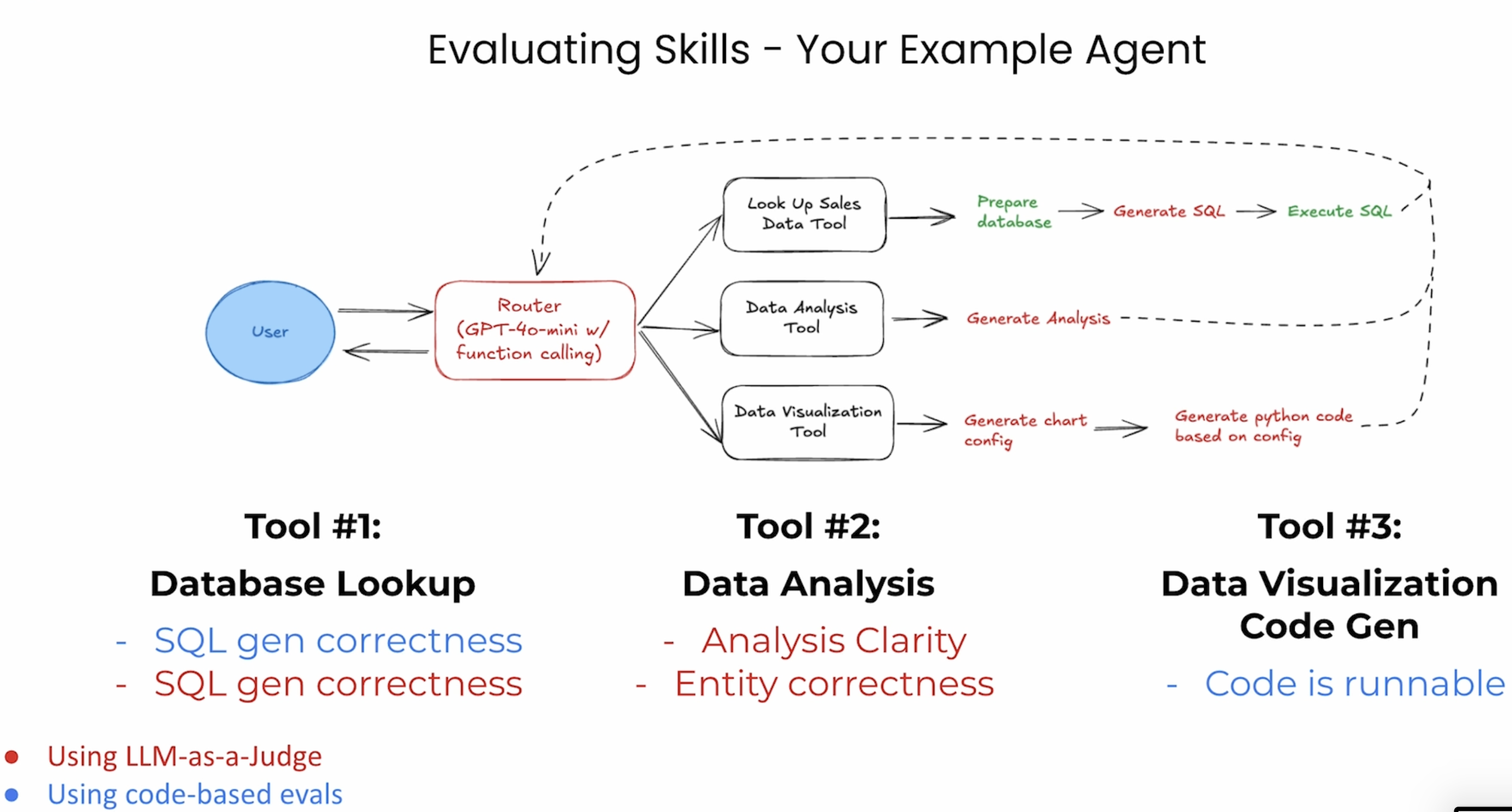

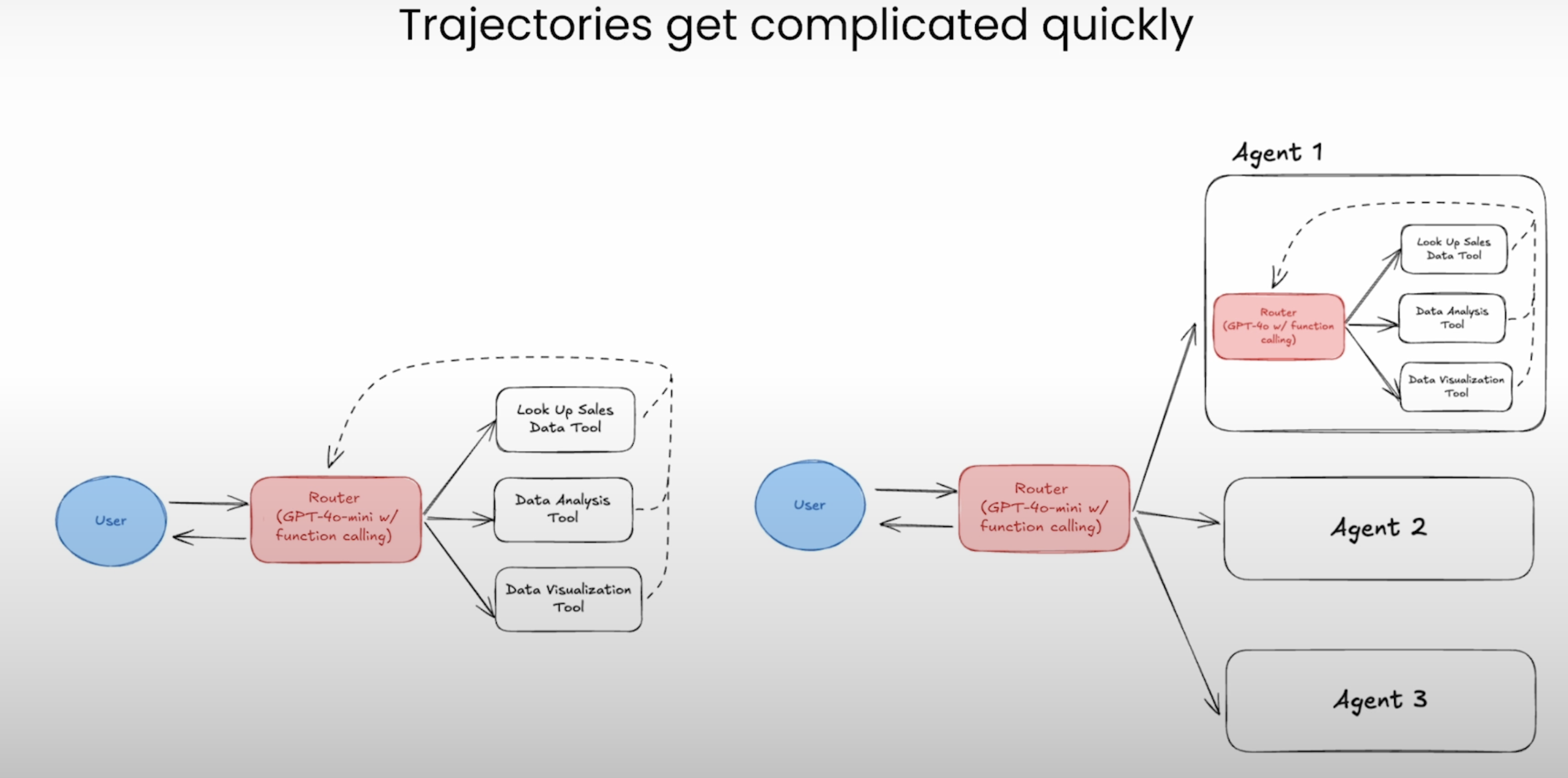

Decomposing agents

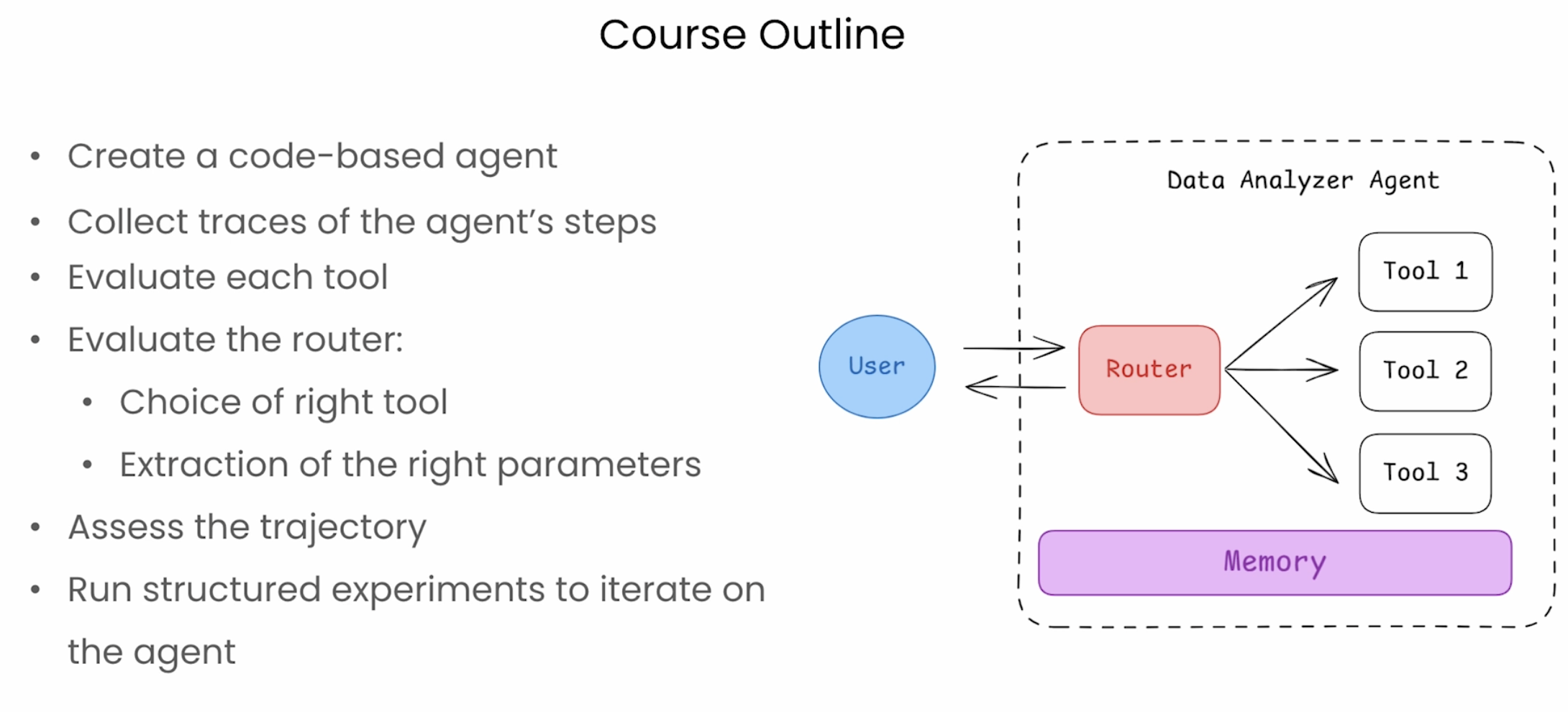

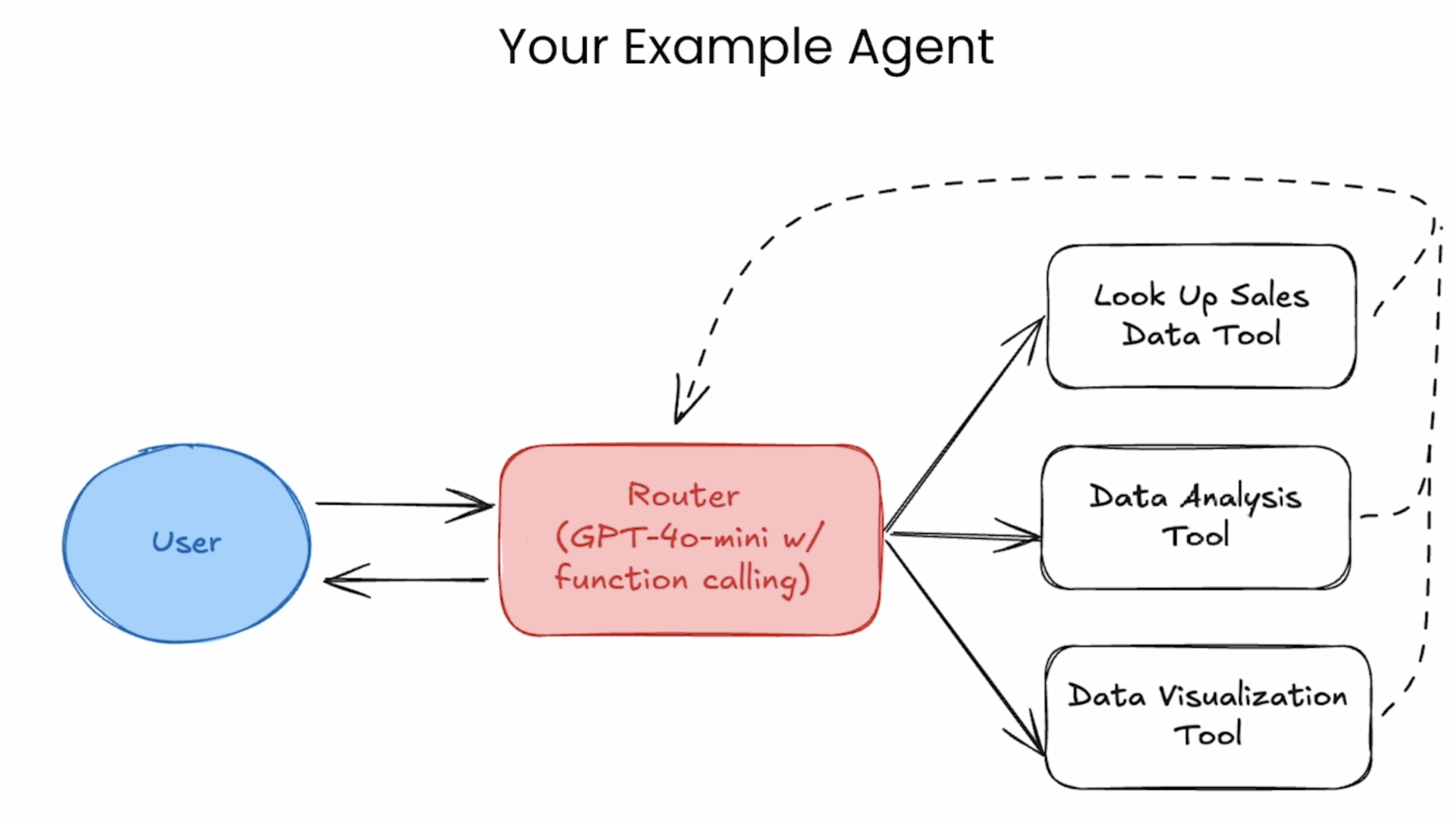

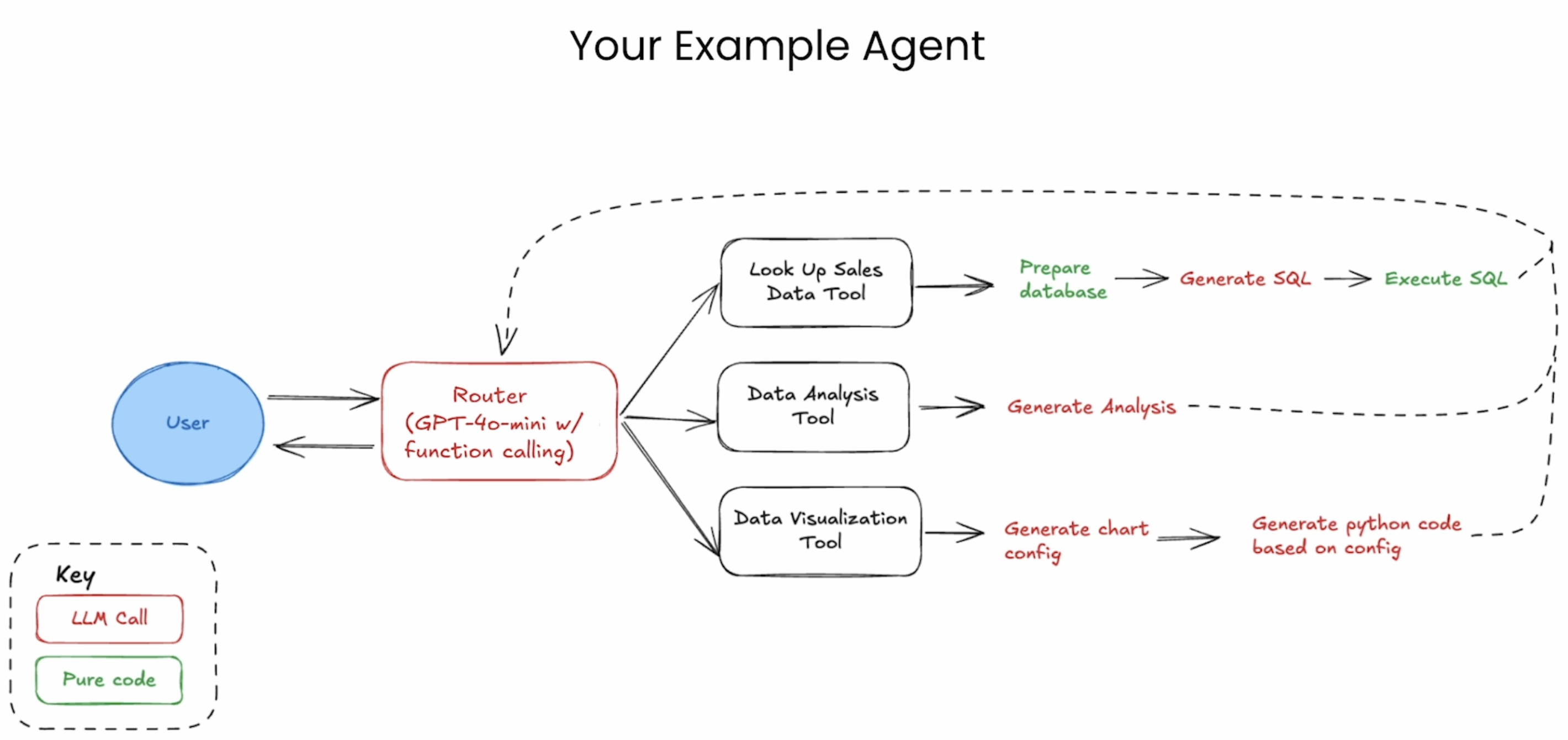

Building your agent

This section implements an agent that analyzes a sales report; see the code for details.

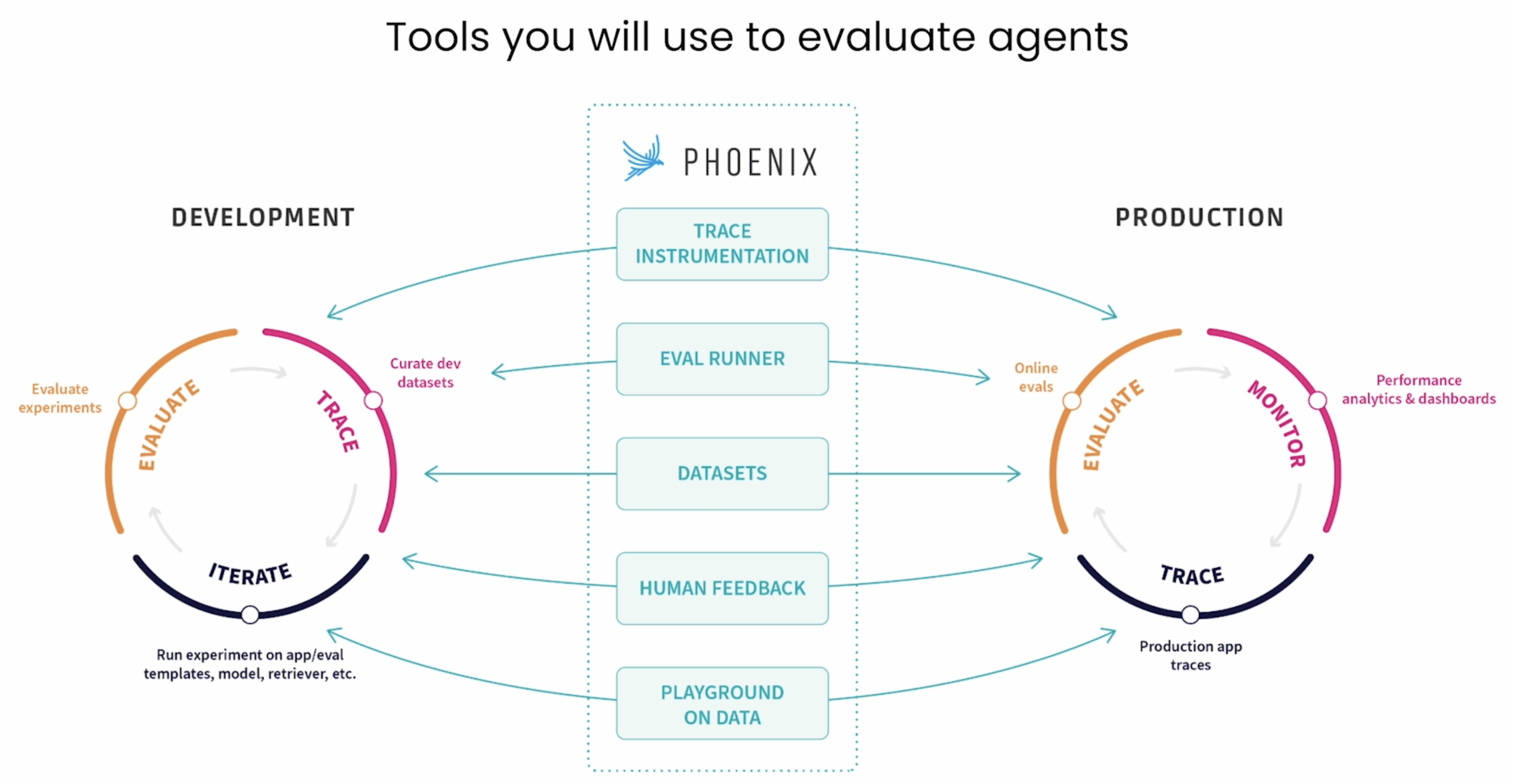

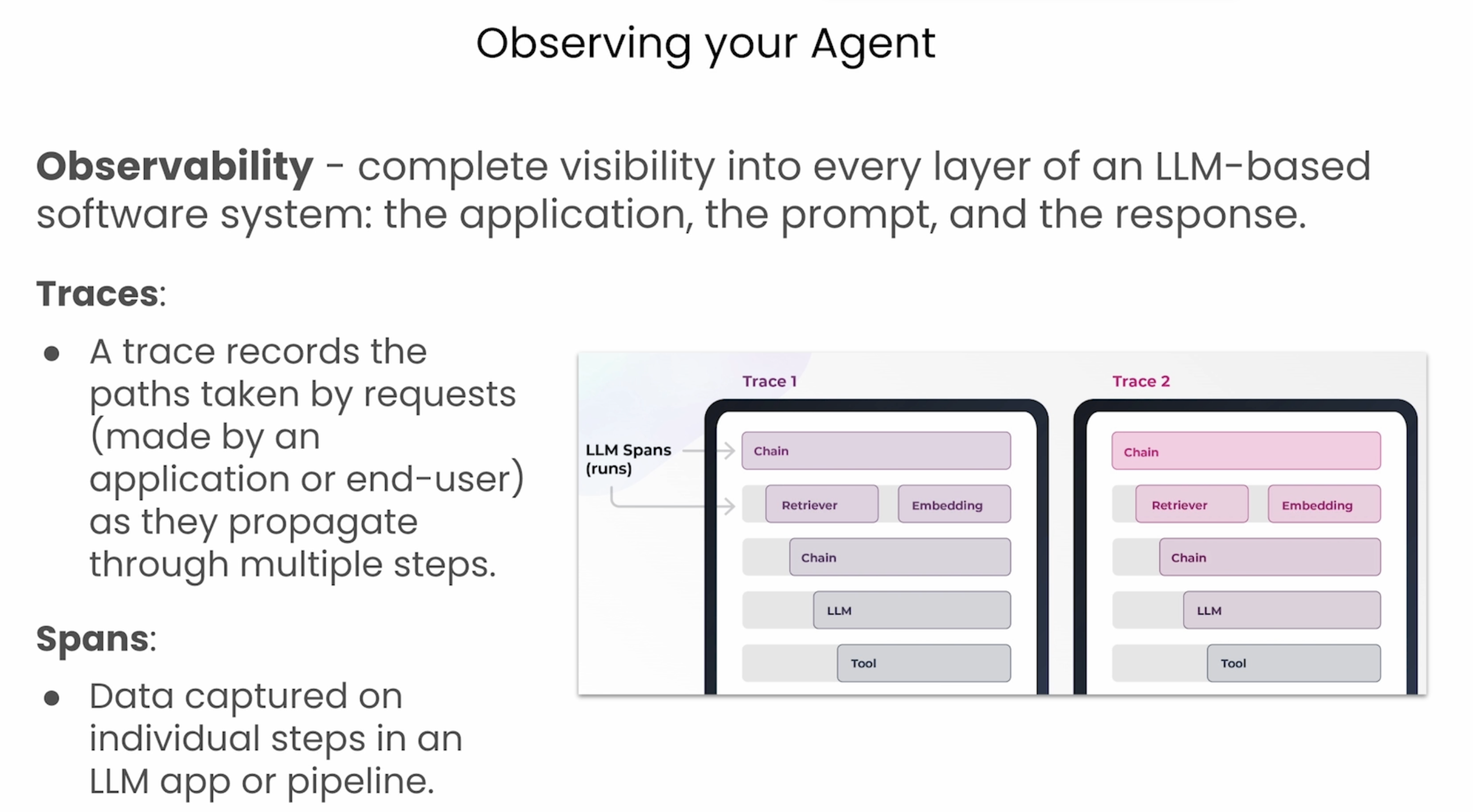

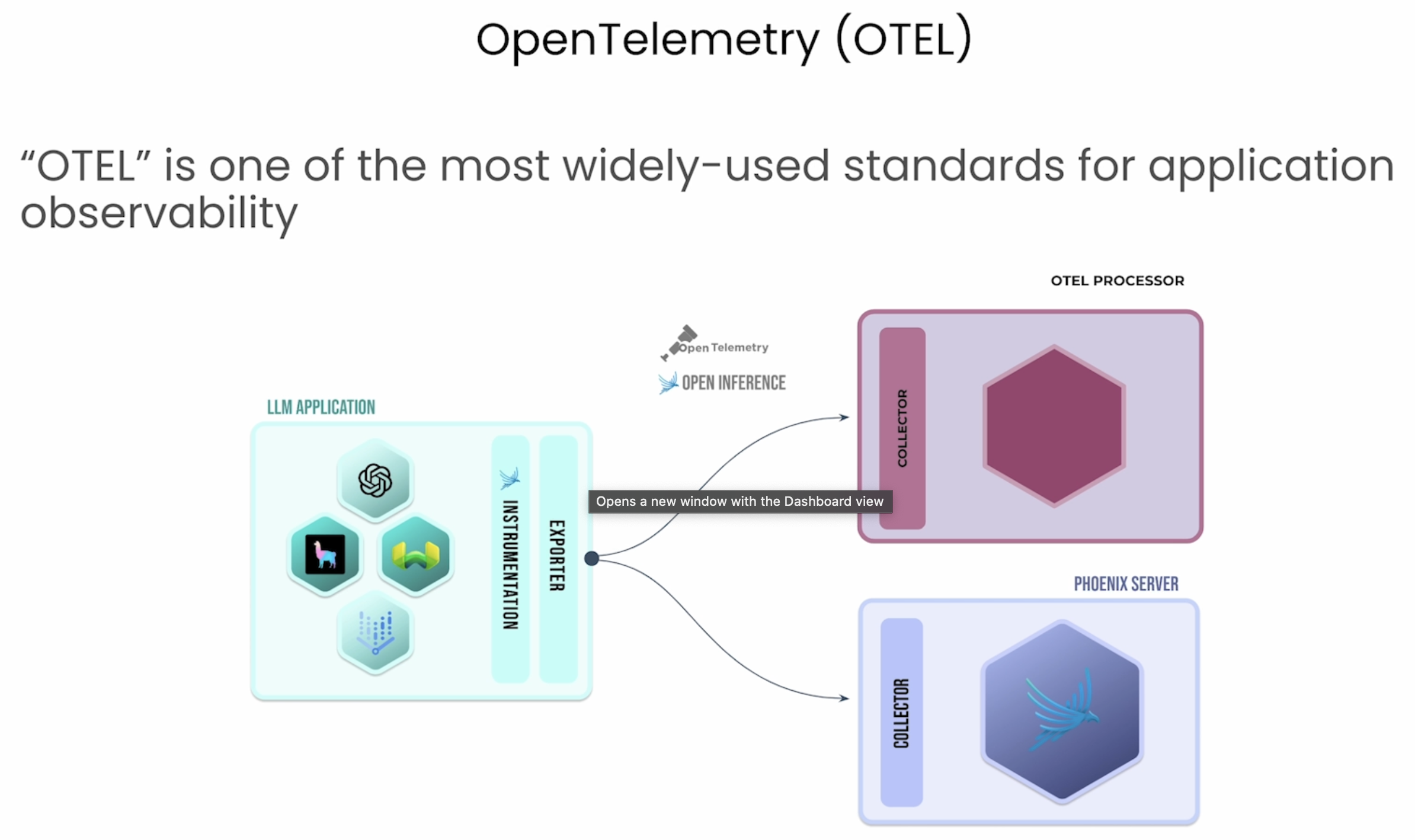

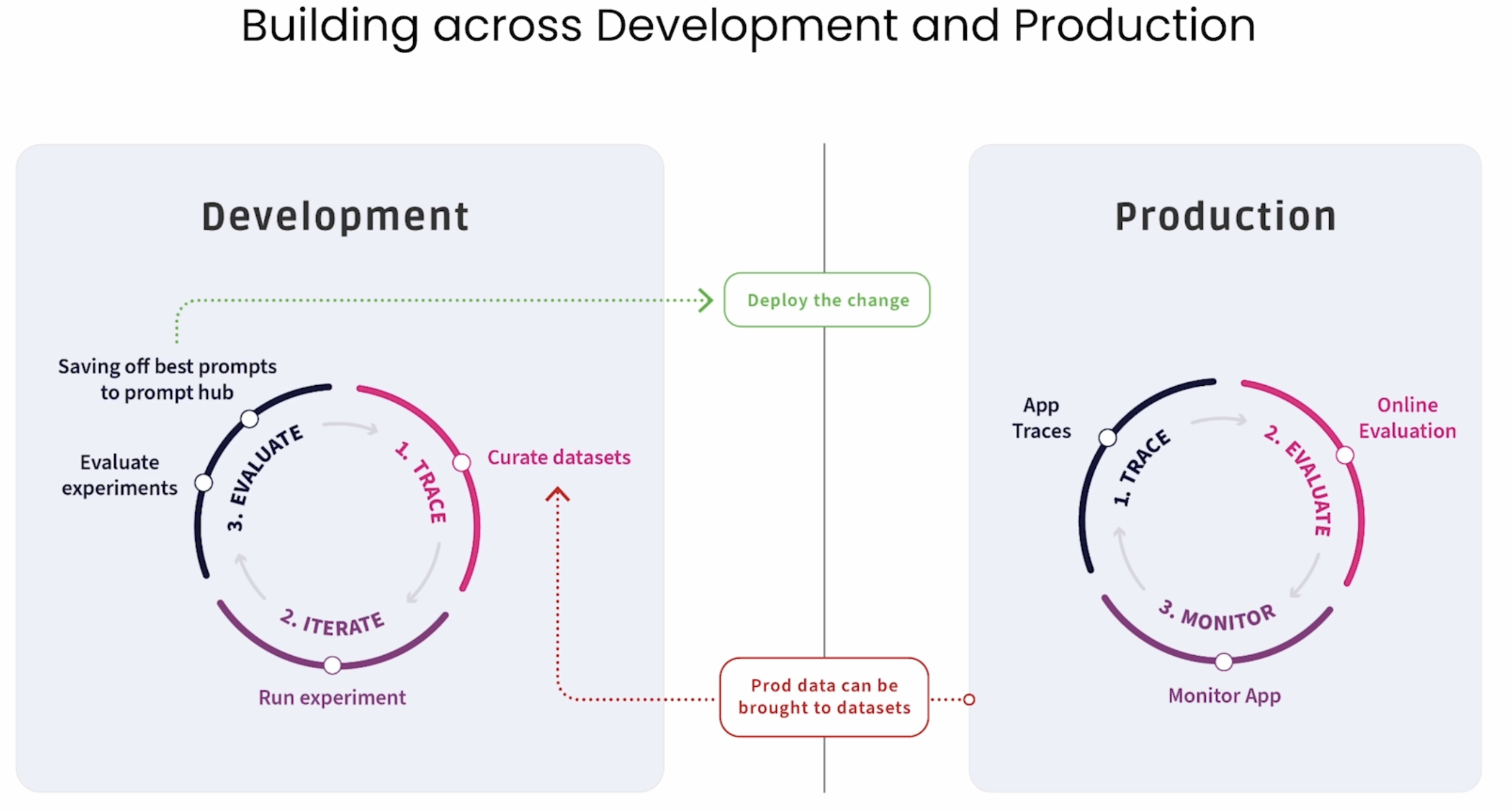

Tracing agents

Tracing your agent

The code for this section shows how to trace an agent with the Phoenix framework.
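To make the idea of a trace concrete, here is a minimal sketch of what a tracing tool records: a tree of timed spans, one per step of the agent. This is not Phoenix's API. It is a standard-library stand-in, and `SPANS`, `span`, `run_agent`, and the tool name `lookup_sales_data` are hypothetical names chosen for illustration. The real Phoenix setup is in the repository code.

```python
import time
import uuid
from contextlib import contextmanager

# Hypothetical in-memory store; a real tracing backend would export spans instead.
SPANS = []
_stack = []  # ids of the currently open spans, innermost last


@contextmanager
def span(name):
    """Record one unit of work (a span) together with its parent span."""
    record = {
        "id": uuid.uuid4().hex,
        "name": name,
        "parent_id": _stack[-1] if _stack else None,
        "start": time.perf_counter(),
    }
    _stack.append(record["id"])
    try:
        yield record
    finally:
        _stack.pop()
        record["end"] = time.perf_counter()
        SPANS.append(record)  # spans are stored in the order they finish


def run_agent(question):
    # Hypothetical agent steps, named only for illustration.
    with span("agent_run"):
        with span("router"):
            tool = "lookup_sales_data"
        with span(tool):
            result = f"rows for: {question}"
    return result
```

Calling `run_agent(...)` leaves three records in `SPANS`: the router span and the tool span, both pointing at the `agent_run` span as their parent. That parent link is what lets a tracing UI draw a run as a tree.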

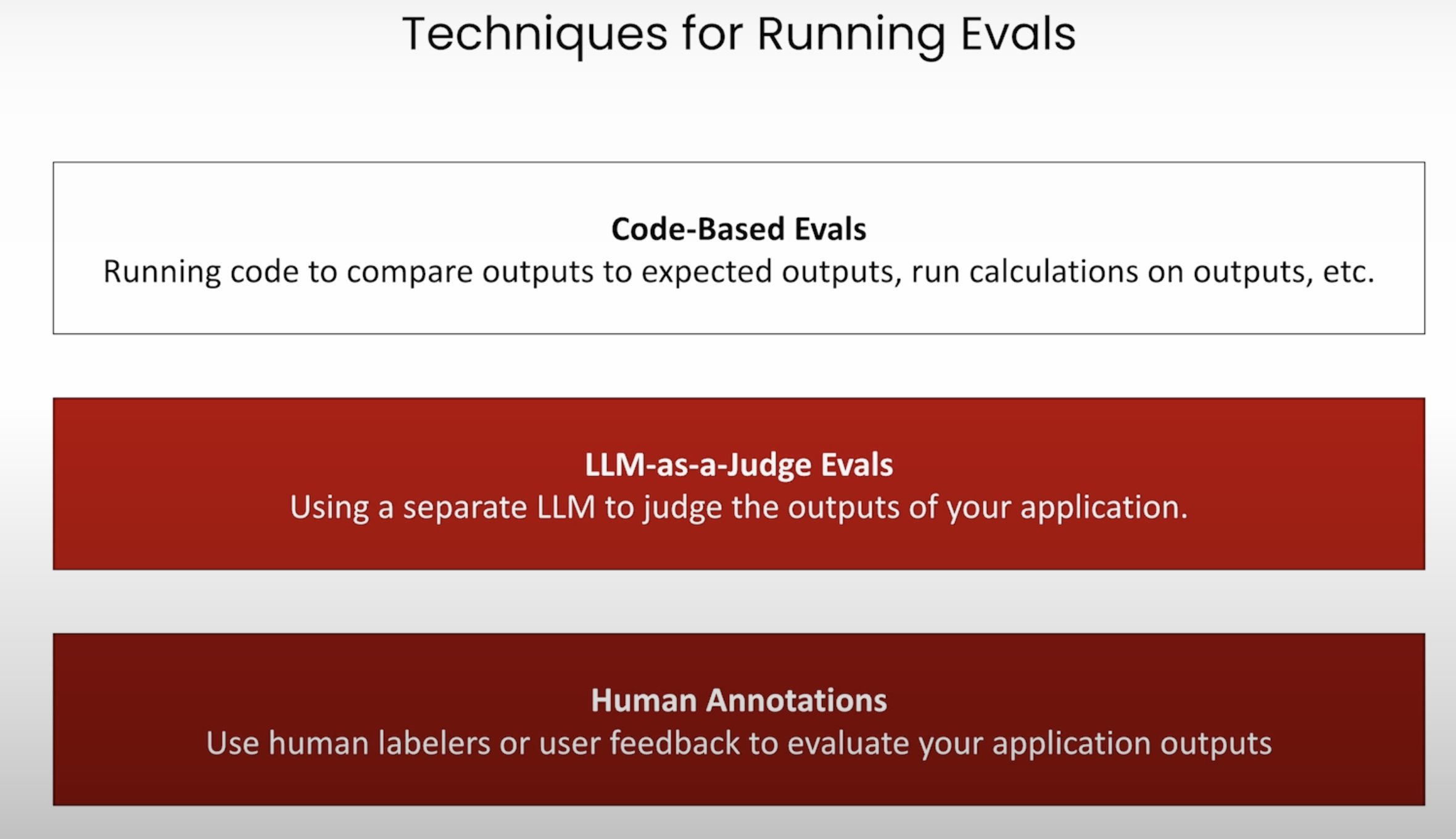

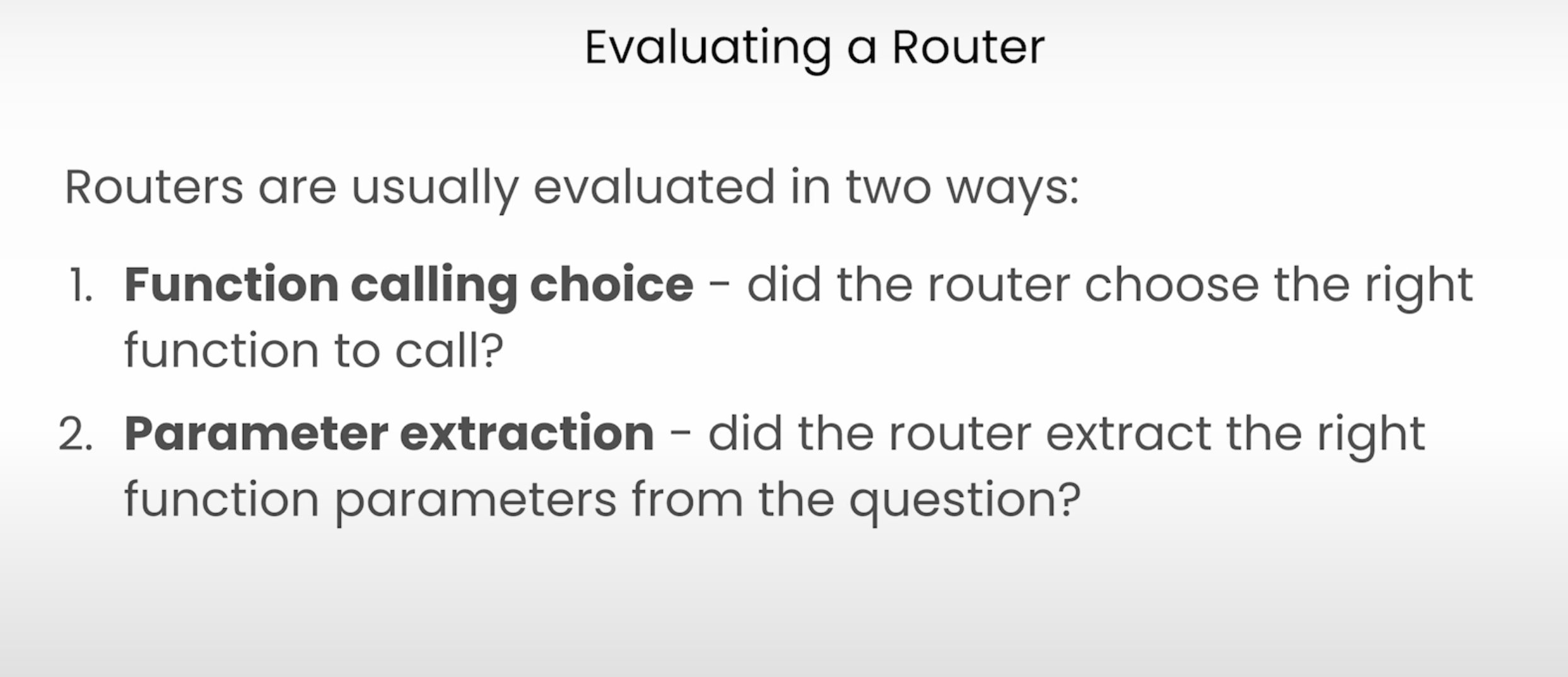

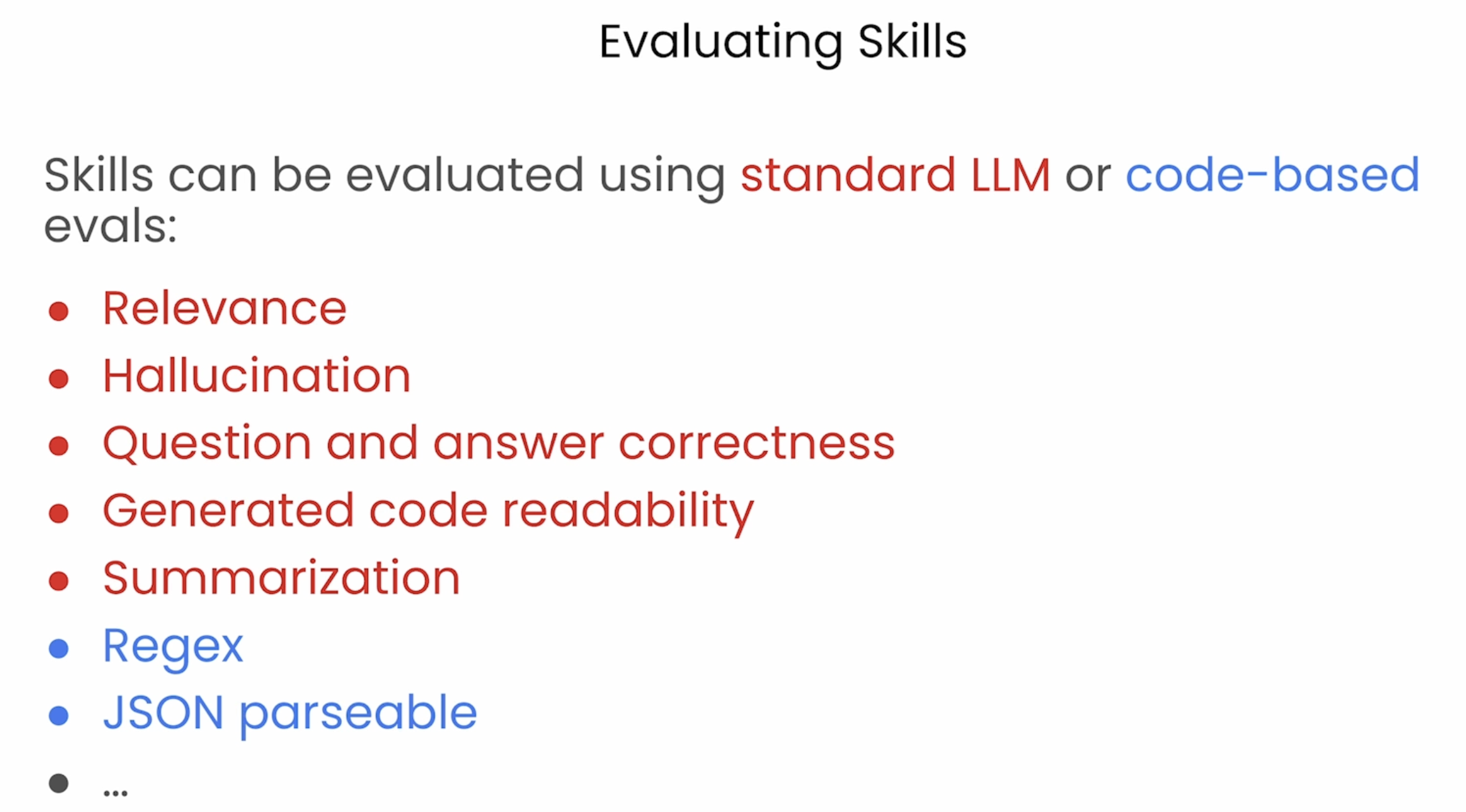

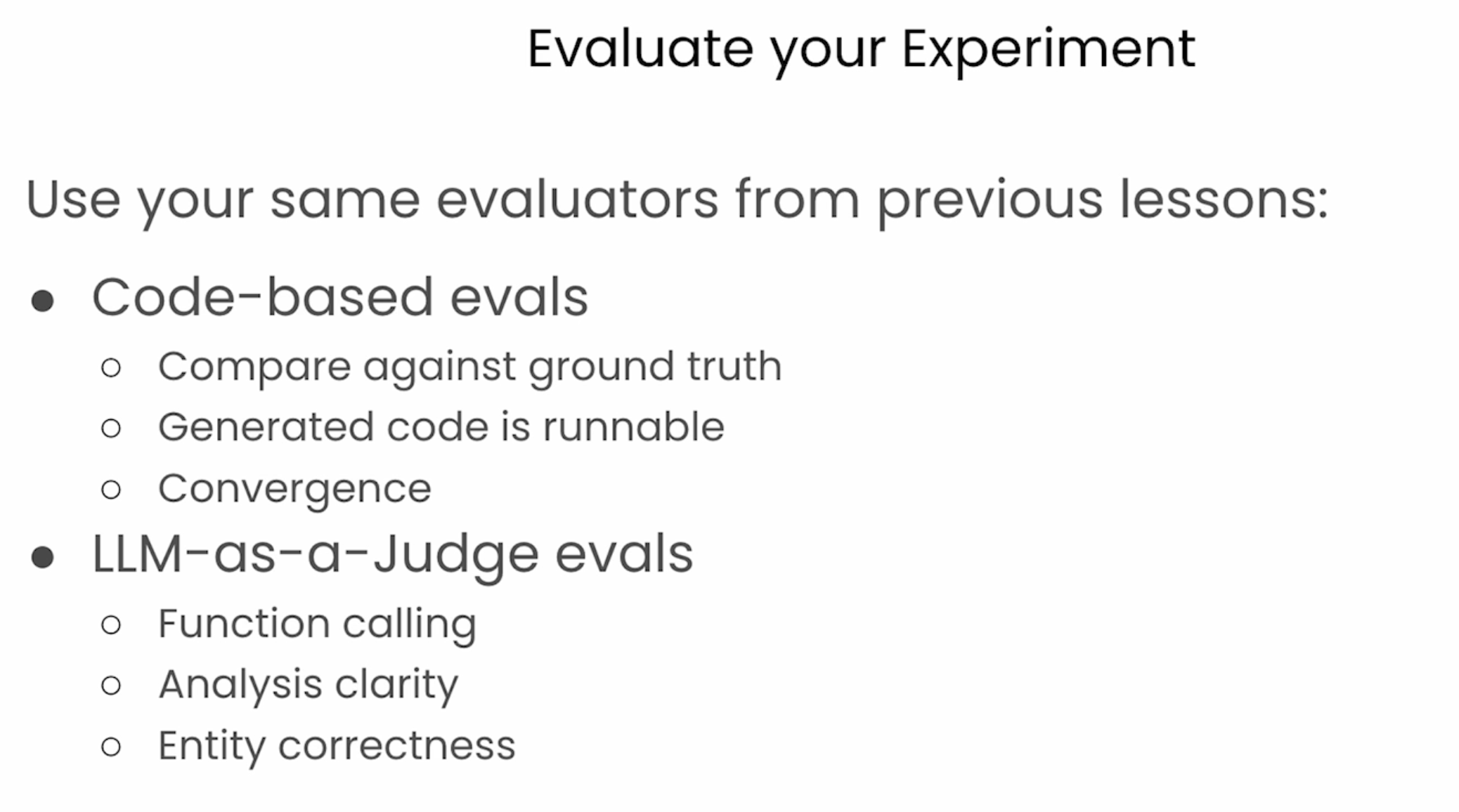

Adding router and skill evaluations

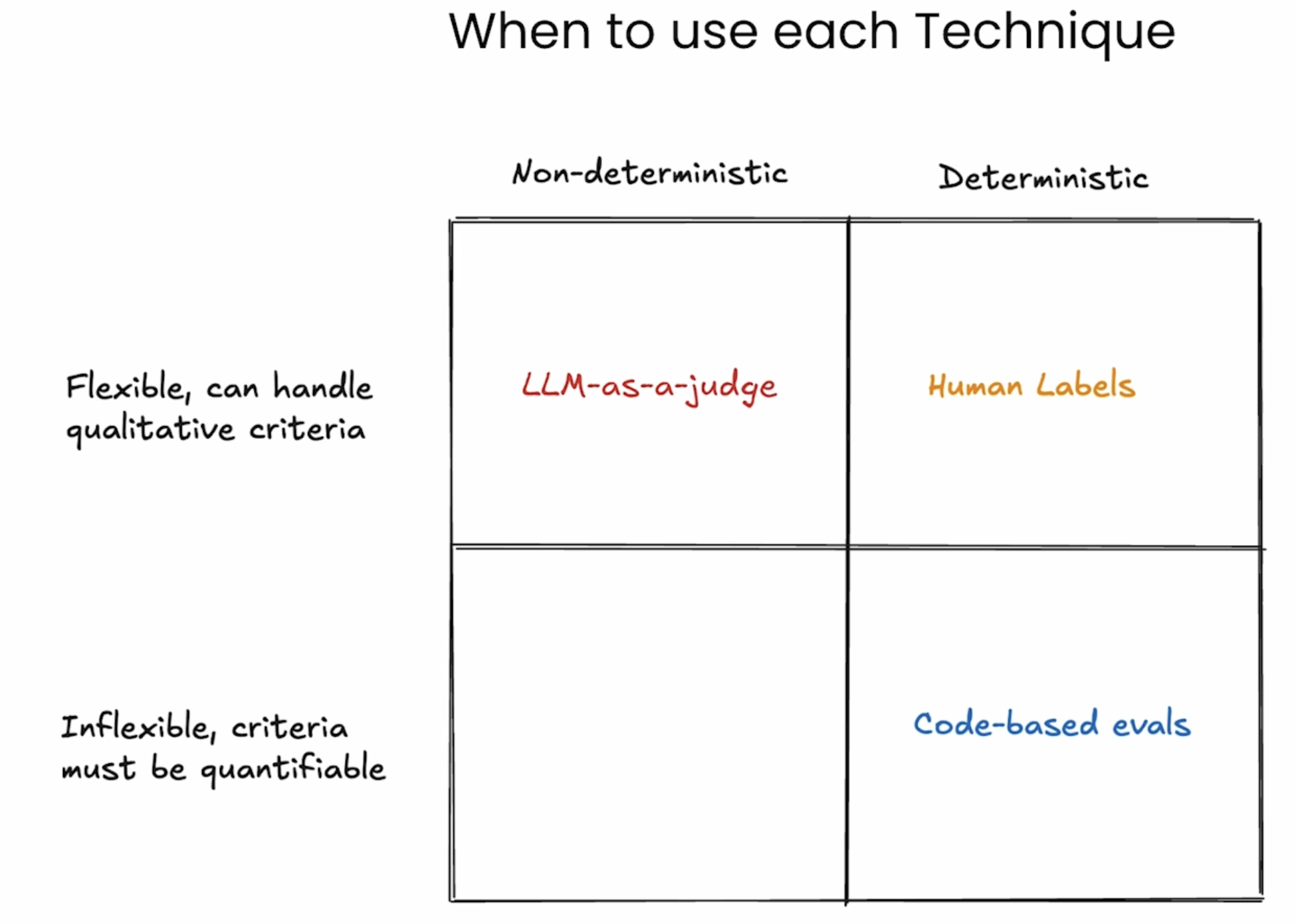

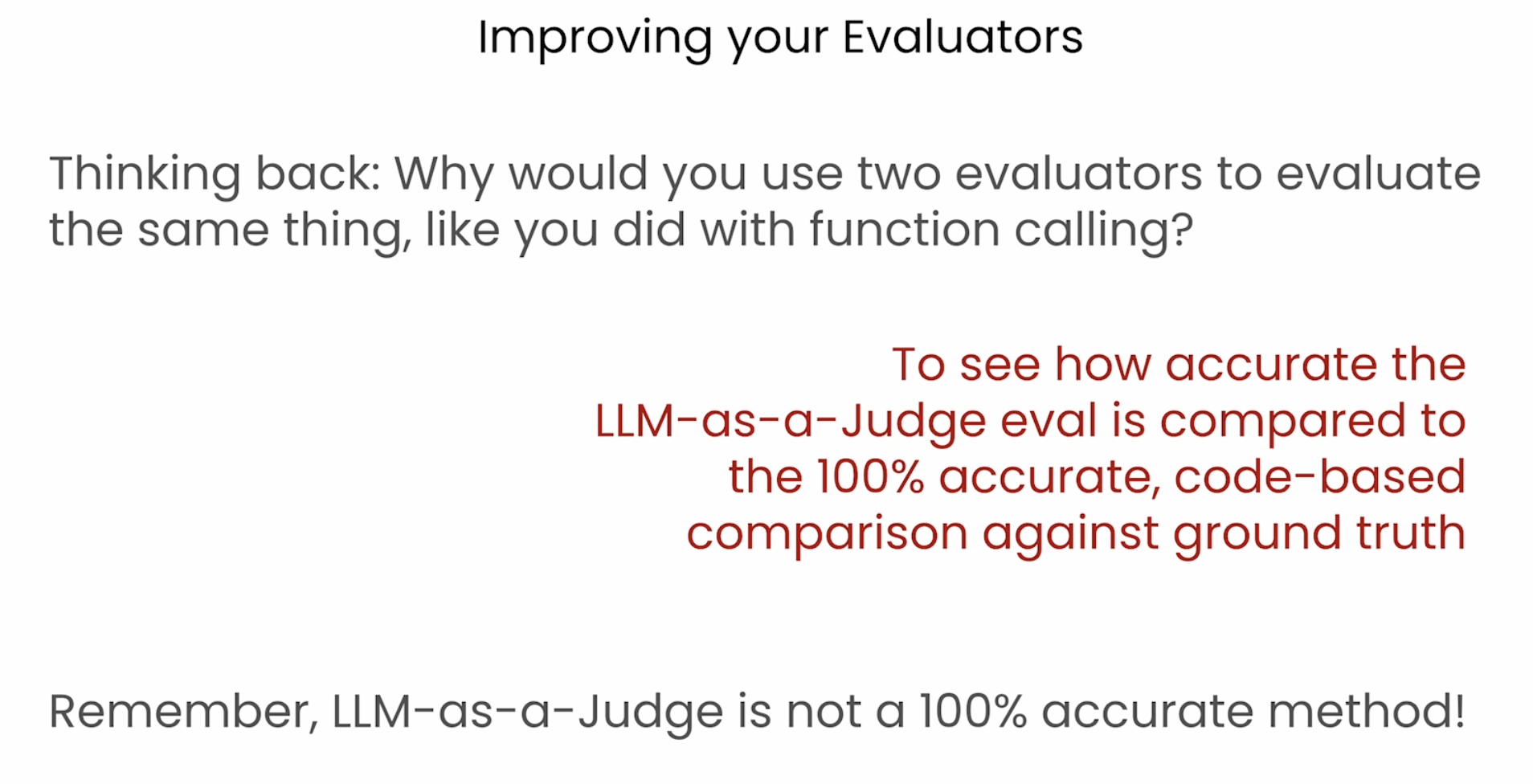

Code-Based

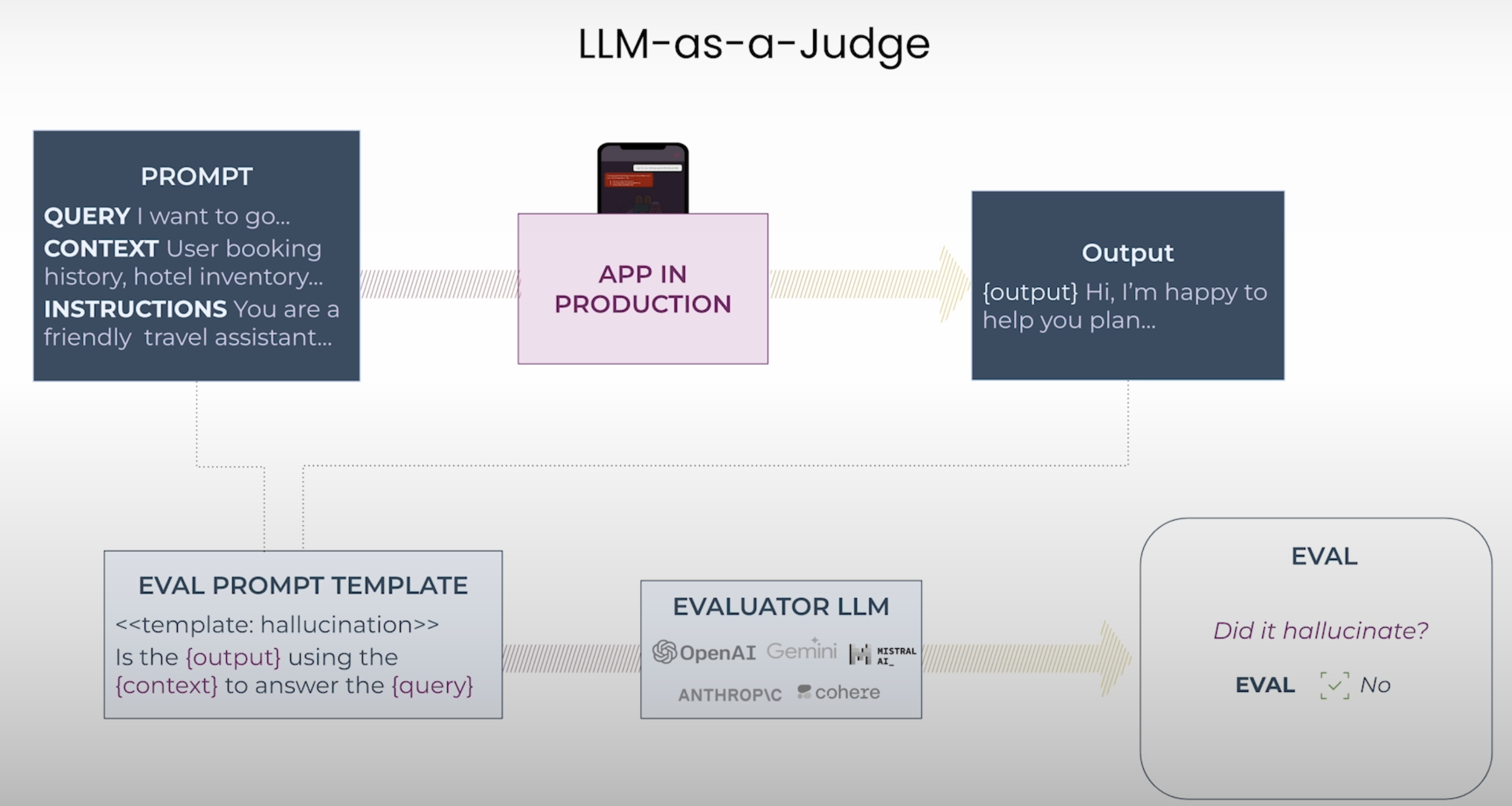

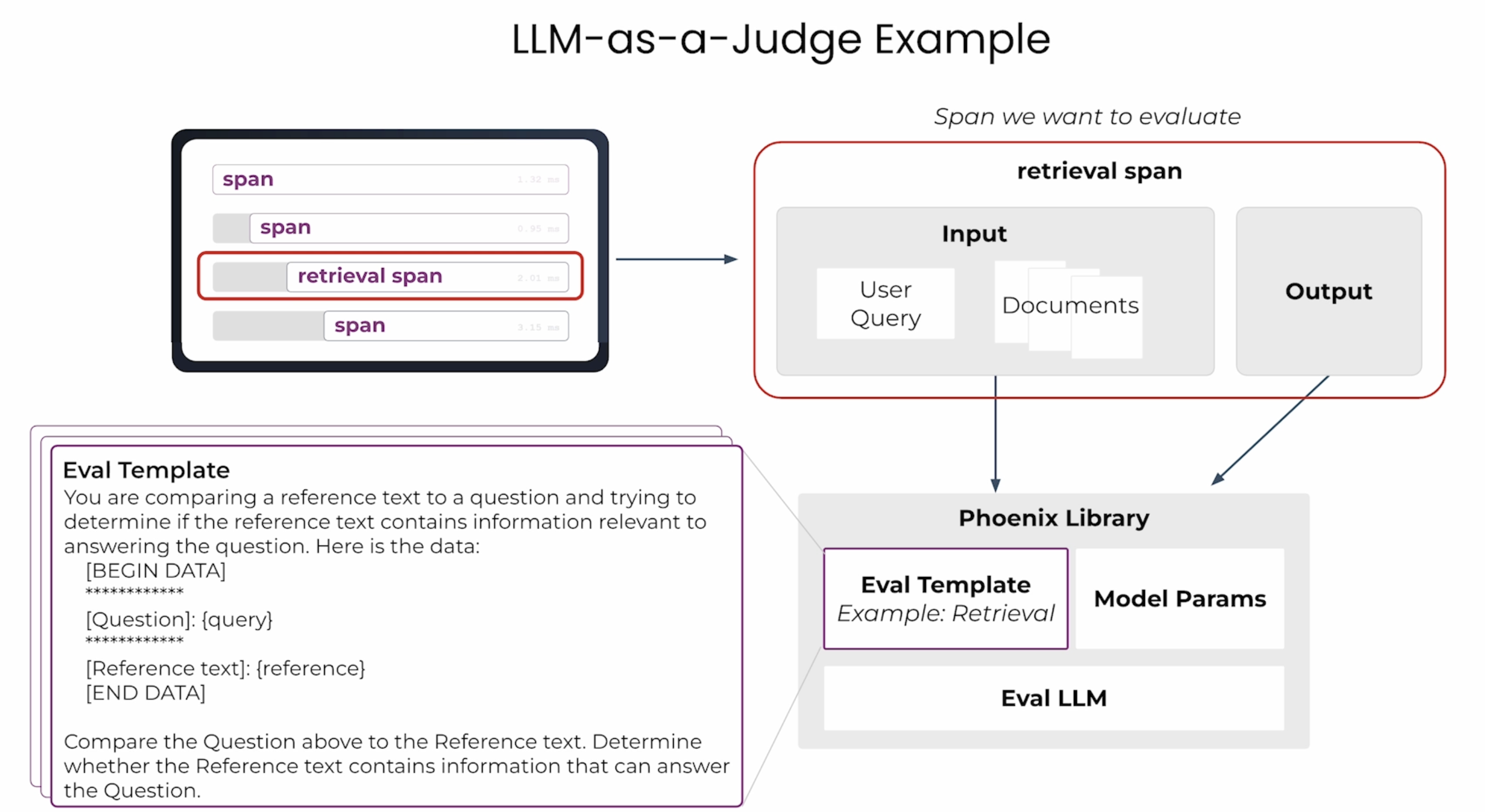

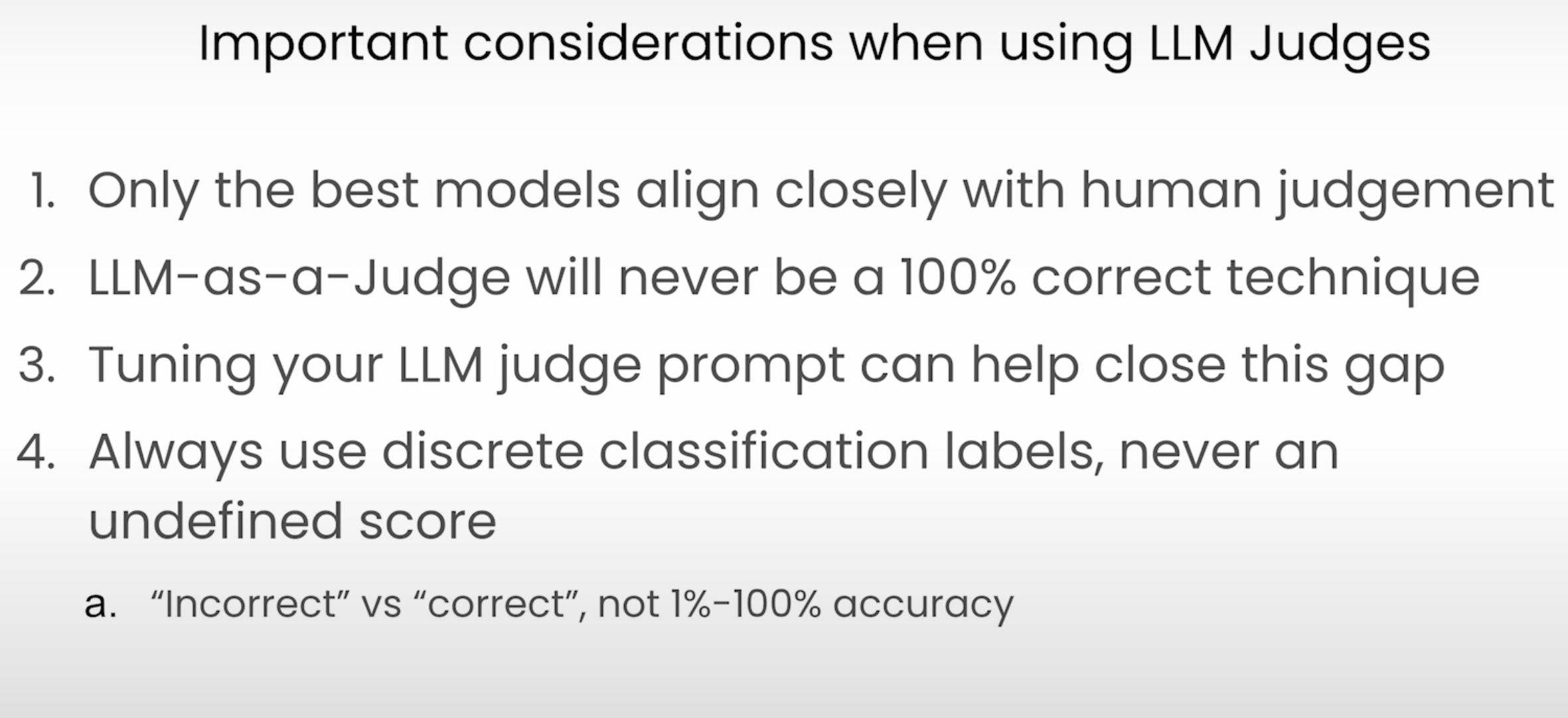
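A code-based evaluation of the router can be as simple as comparing the tool it chose against a labeled expectation. The sketch below assumes the router is a function from a question to a tool name. `keyword_router`, the tool names, and the test cases are hypothetical stand-ins, not the agent from the repository.

```python
def keyword_router(question):
    """Toy stand-in for an agent router (hypothetical, for illustration only)."""
    q = question.lower()
    if "chart" in q or "plot" in q:
        return "generate_visualization"
    if "trend" in q or "why" in q:
        return "analyze_sales_data"
    return "lookup_sales_data"


def evaluate_router(cases, router):
    """Compare the router's choice with the expected tool for every case."""
    results = []
    for case in cases:
        chosen = router(case["question"])
        results.append({
            "question": case["question"],
            "expected": case["expected_tool"],
            "chosen": chosen,
            "correct": chosen == case["expected_tool"],
        })
    accuracy = sum(r["correct"] for r in results) / len(results) if results else 0.0
    return accuracy, results


# Hypothetical labeled cases.
CASES = [
    {"question": "Show total sales for store 1320", "expected_tool": "lookup_sales_data"},
    {"question": "Plot monthly revenue", "expected_tool": "generate_visualization"},
    {"question": "What trends appear in November?", "expected_tool": "analyze_sales_data"},
    {"question": "Visualize sales by region", "expected_tool": "generate_visualization"},
]

accuracy, results = evaluate_router(CASES, keyword_router)
failures = [r for r in results if not r["correct"]]
```

Because the check is plain equality, it is deterministic and cheap to run on every change. The list of failures shows which questions were misrouted, here the "Visualize" question that the toy router has no keyword for.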

LLM-Judge

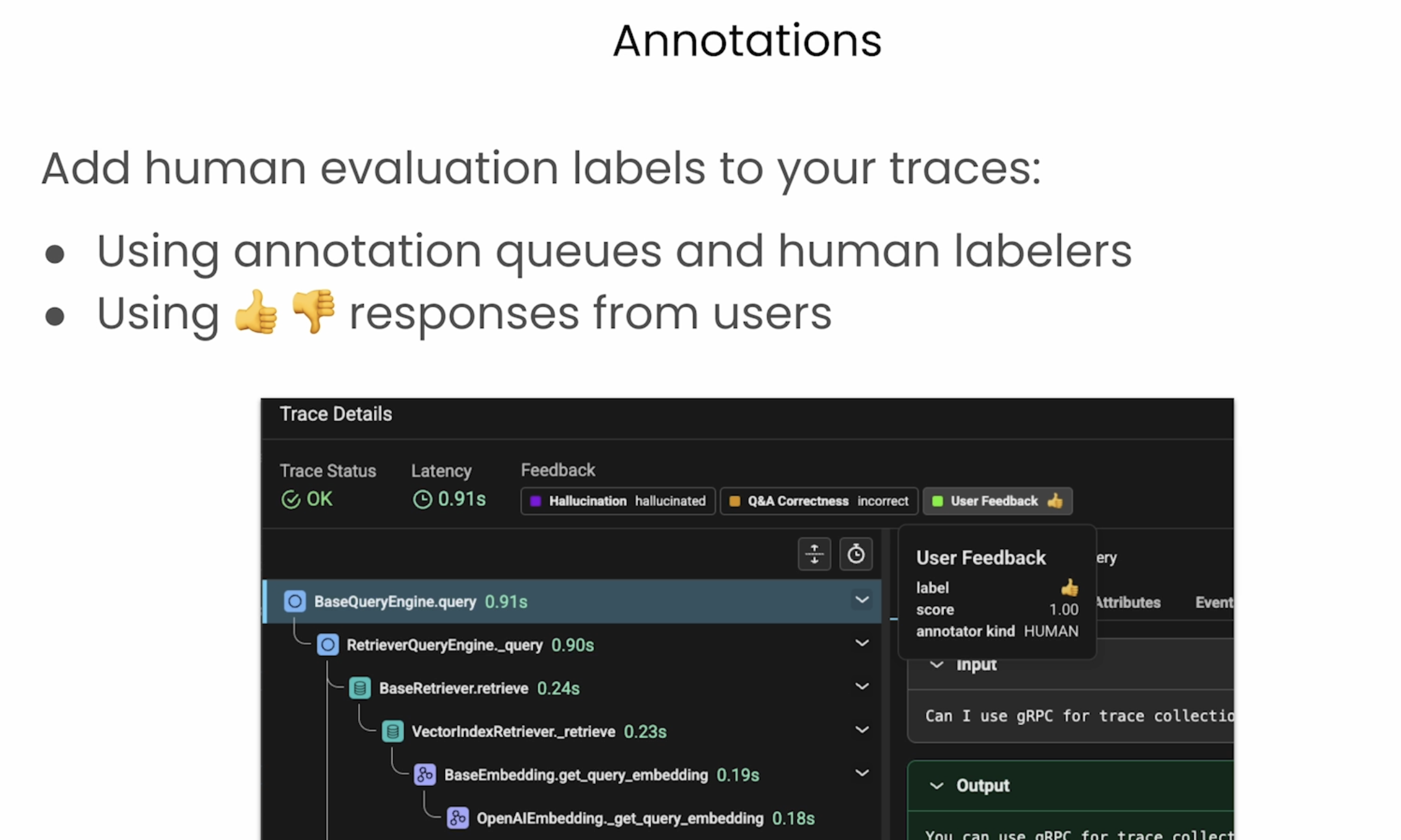
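An LLM-as-a-judge evaluation usually comes down to a prompt template plus strict parsing of the judge's answer. The sketch below assumes the judge model is reachable through a function `call_llm(prompt) -> str`. That function, the template wording, and the label set are assumptions made for illustration, not the prompts used in the repository.

```python
JUDGE_TEMPLATE = """You are evaluating whether a response answers the question.
[Question]: {question}
[Response]: {response}
Answer with exactly one word: "correct" or "incorrect"."""

ALLOWED_LABELS = ("correct", "incorrect")


def judge(question, response, call_llm):
    """Ask a judge model for a label and normalize its reply.

    call_llm is a hypothetical callable that takes a prompt string and
    returns the model's raw text; plug in any client here.
    """
    prompt = JUDGE_TEMPLATE.format(question=question, response=response)
    raw = call_llm(prompt)
    # Normalize case, surrounding whitespace, and trailing punctuation or quotes.
    label = raw.strip().lower().strip('."')
    # Anything outside the allowed set is flagged instead of guessed at.
    return label if label in ALLOWED_LABELS else "unparseable"
```

Restricting the judge to a small, fixed label set and treating every other reply as `"unparseable"` keeps the scores aggregatable and makes judge failures visible rather than silently miscounted.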

Annotations

Evaluating

Adding trajectory evaluations

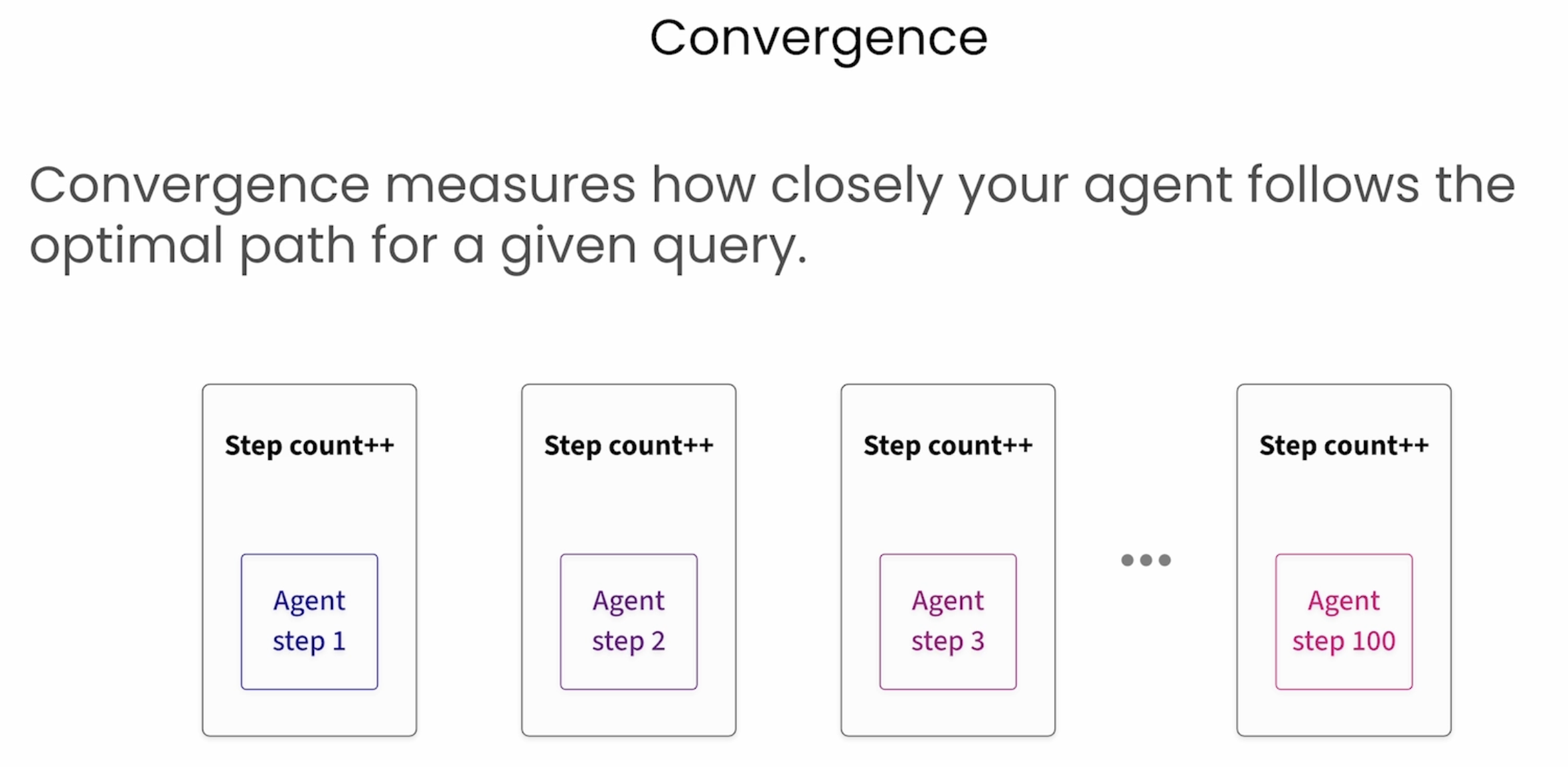

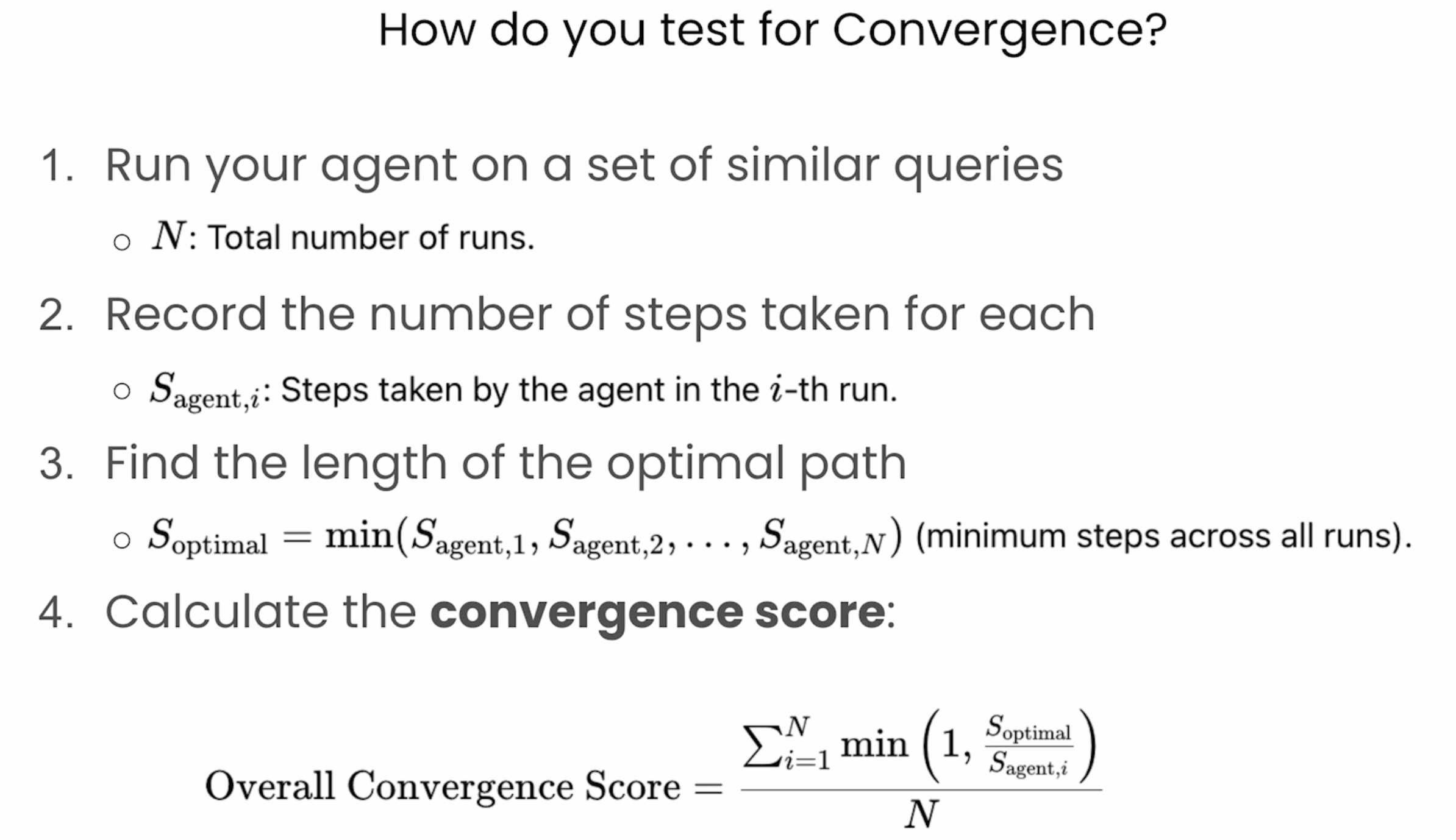

Convergence

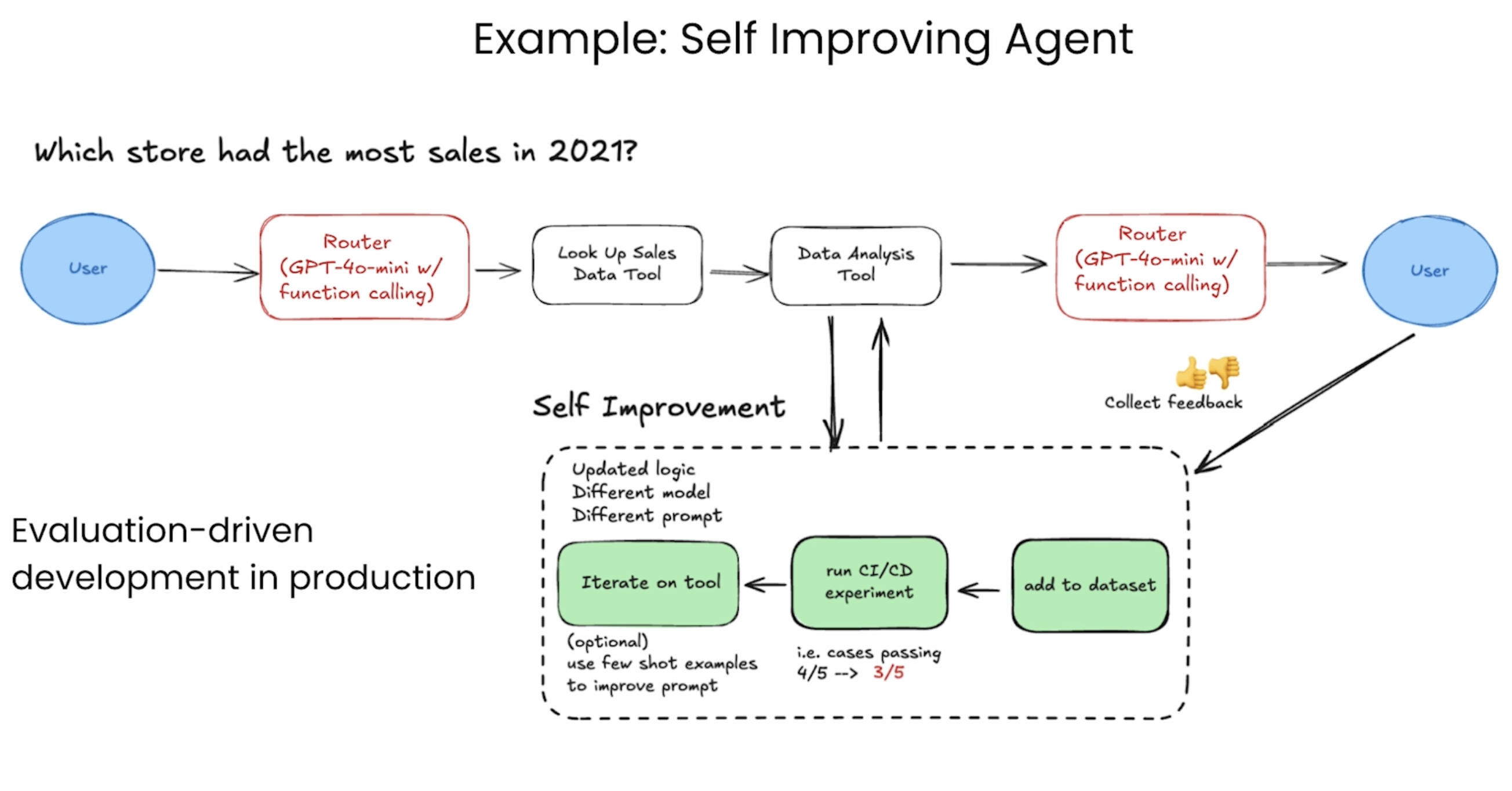

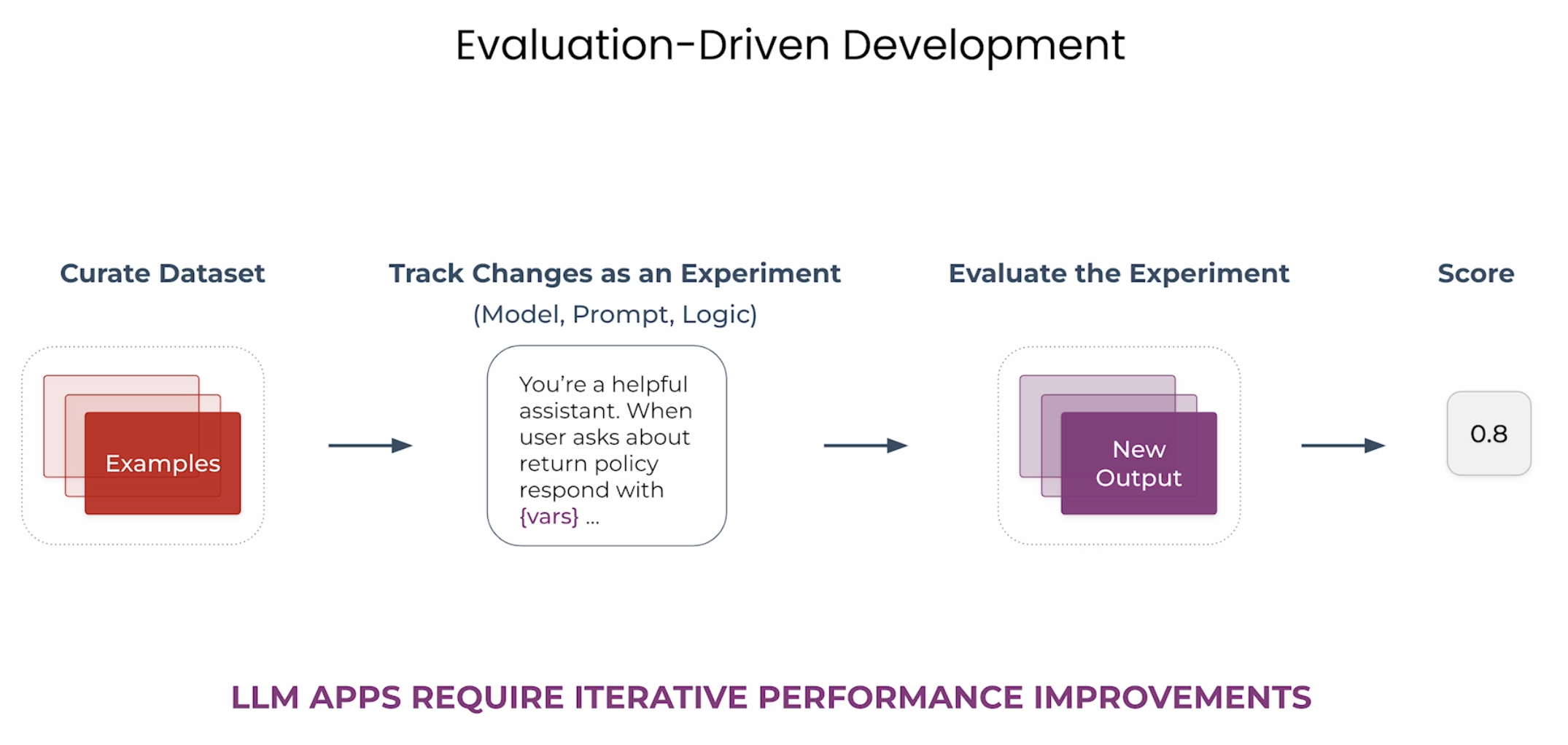

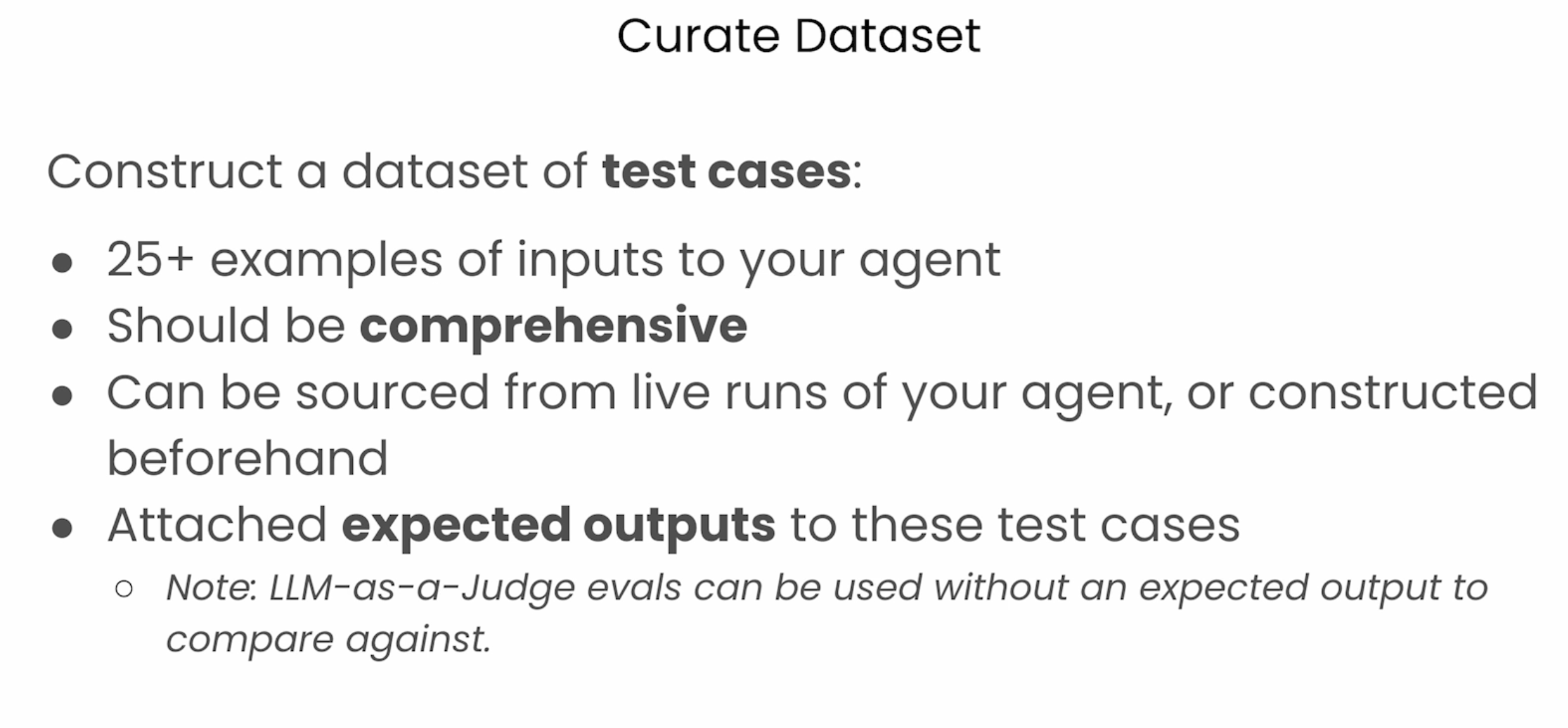

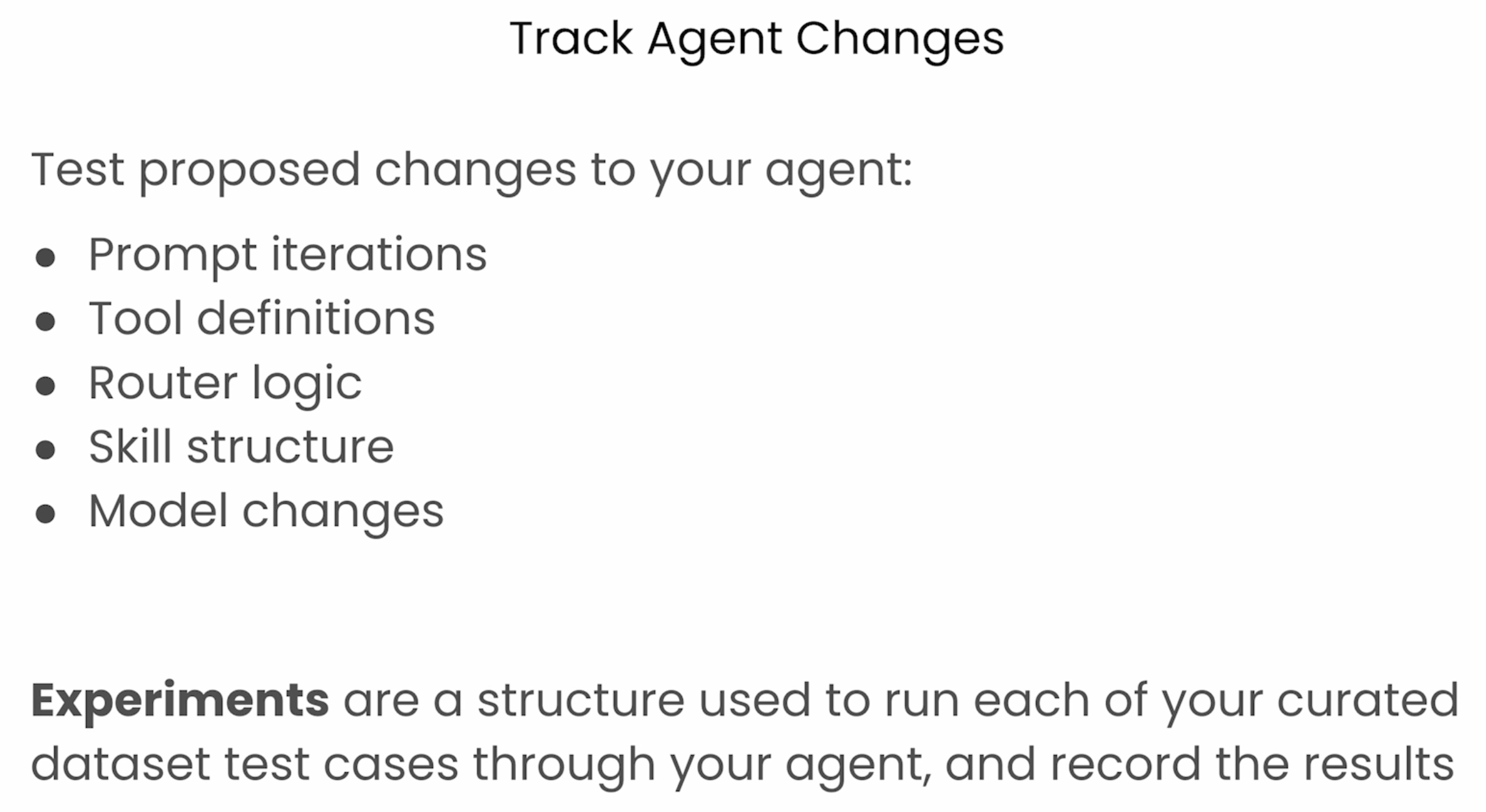
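One way to turn convergence into a number, assuming it is defined as the ratio of the optimal path length to the number of steps each run actually took, averaged over runs of similar queries. In this sketch the shortest observed run stands in for the optimal path, which is an assumption, and `convergence_score` is a hypothetical helper name.

```python
def convergence_score(step_counts):
    """Average of optimal_steps / actual_steps over a set of runs.

    step_counts holds the number of steps the agent took on each run.
    The shortest observed run is used as the optimal path length (assumption).
    Returns a value in (0, 1]; 1.0 means every run took the optimal path.
    """
    if not step_counts:
        raise ValueError("need at least one run")
    if any(n <= 0 for n in step_counts):
        raise ValueError("step counts must be positive")
    optimal = min(step_counts)
    return sum(optimal / n for n in step_counts) / len(step_counts)


# Hypothetical step counts from four runs of similar queries.
score = convergence_score([3, 3, 4, 6])
```

For the four runs above the per-run ratios are 1, 1, 0.75, and 0.5, so the score is 0.8125. A score that drops after a prompt or tool change suggests the agent has started taking longer detours to reach the same answers.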

Adding structure to your evaluations

Improving your LLM-as-a-judge

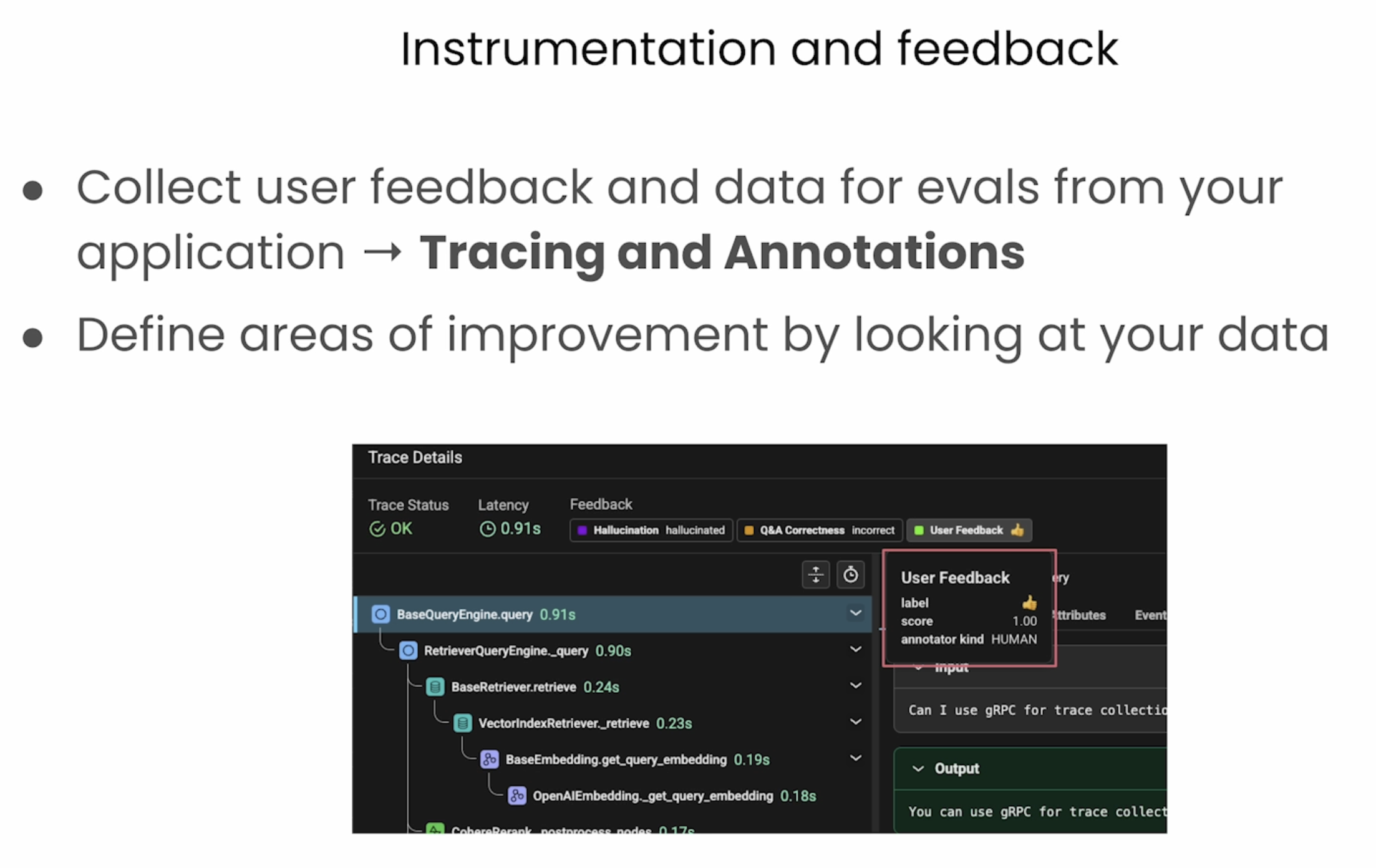

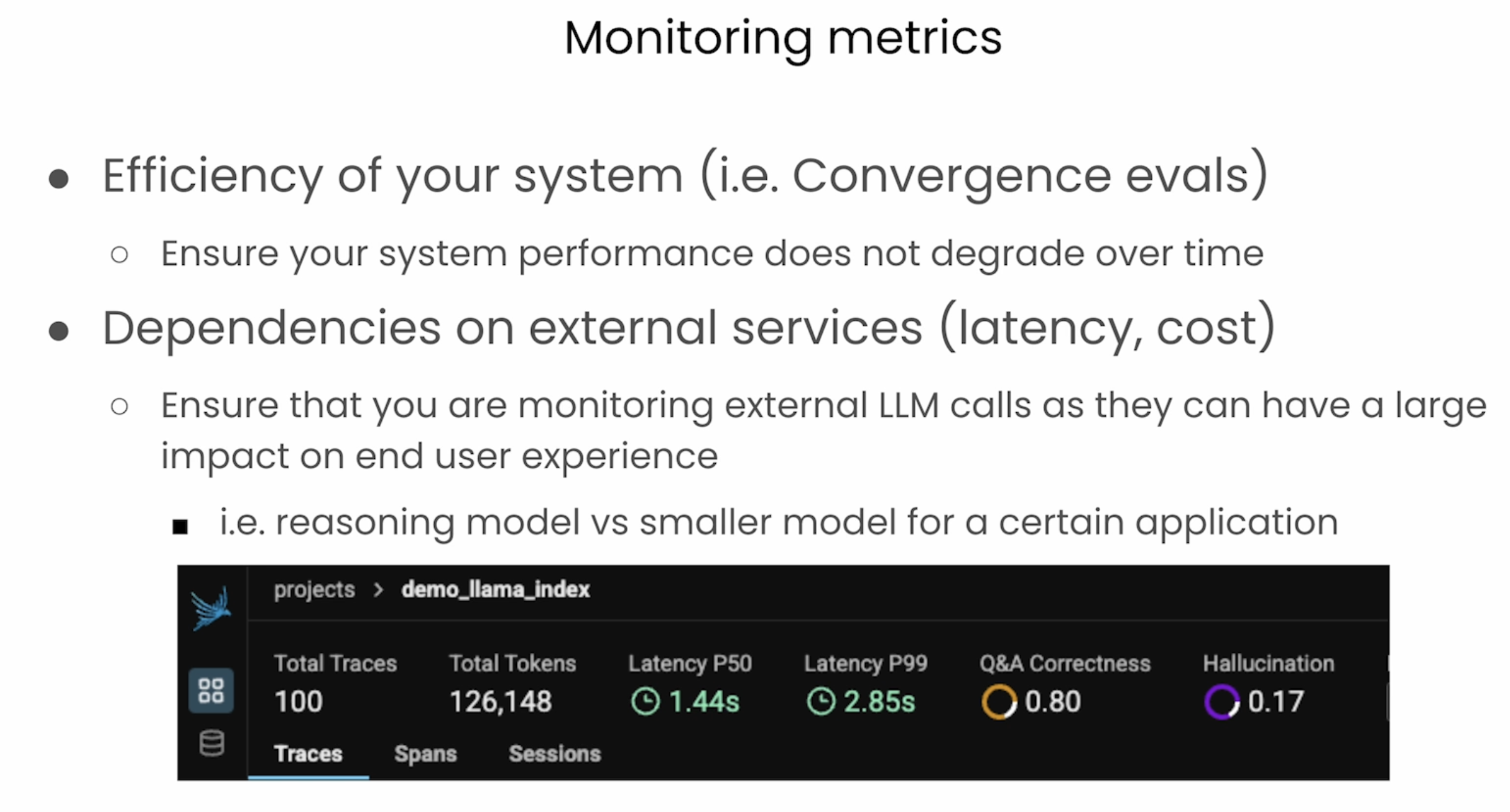

Monitoring agents

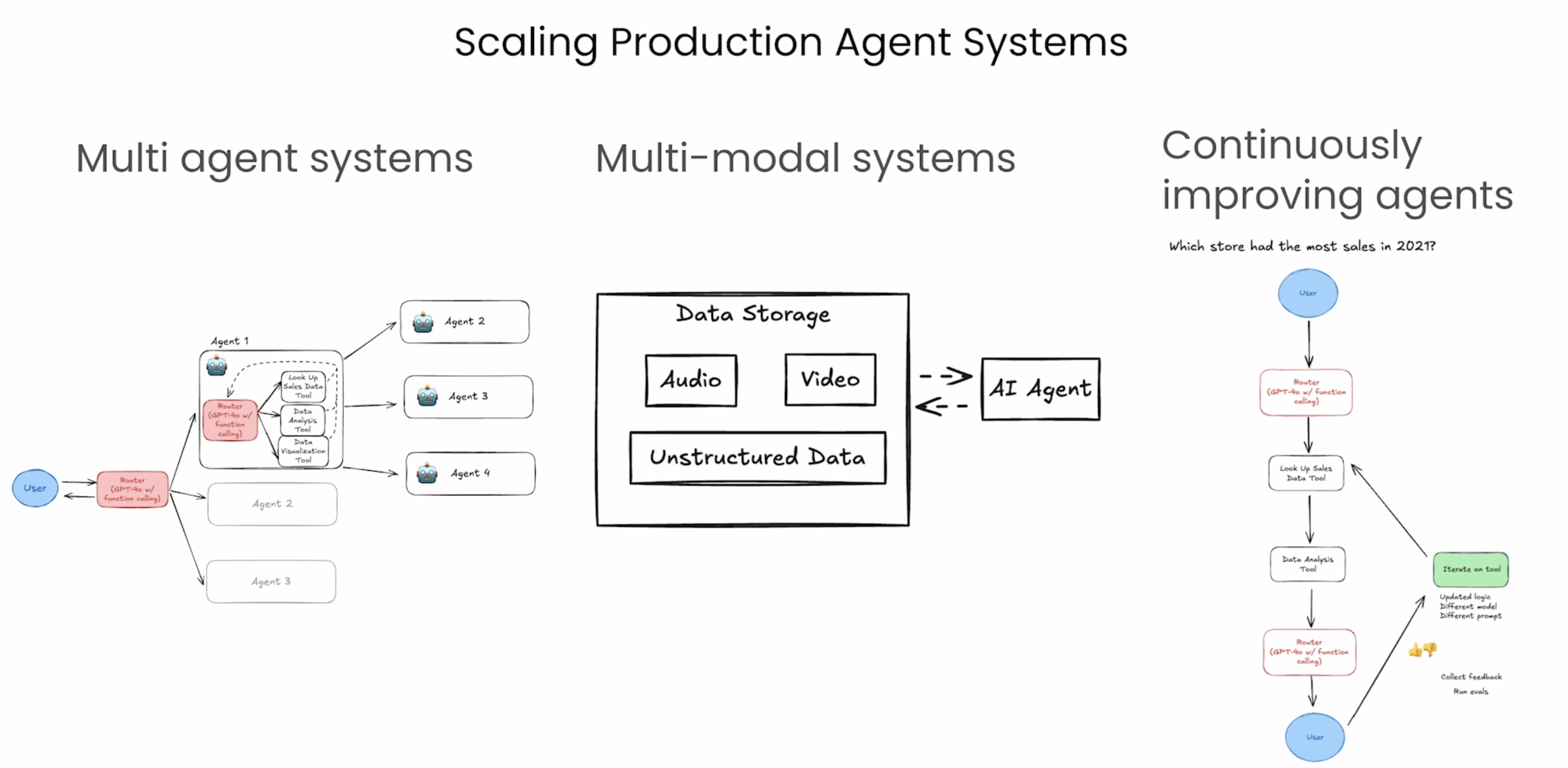

Problems in production environments and their solutions